Drive hyper-personalized customer experiences with Amazon Personalize and generative AI

AWS Machine Learning Blog

NOVEMBER 26, 2023

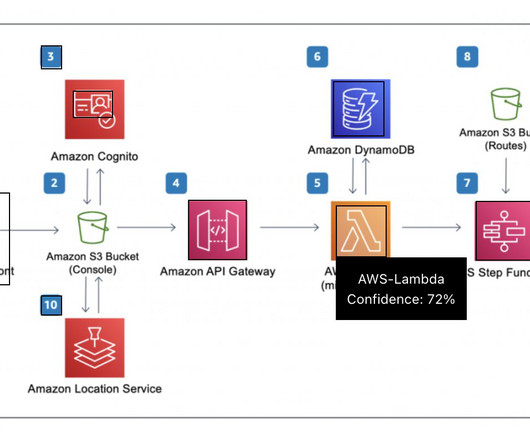

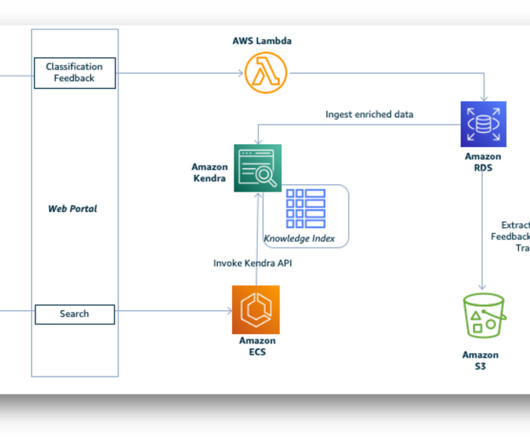

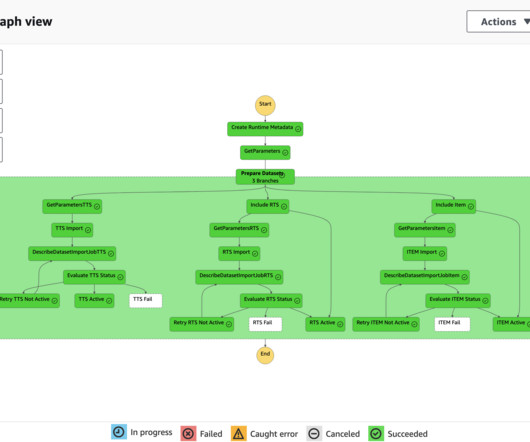

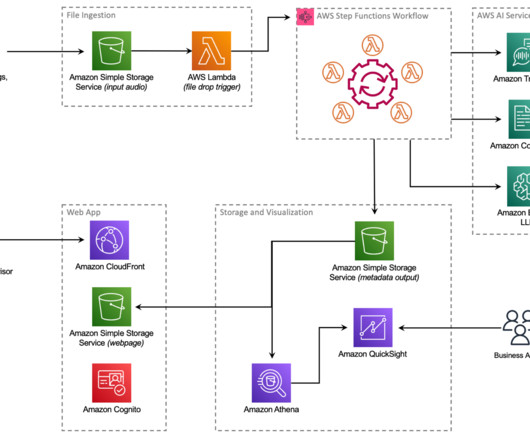

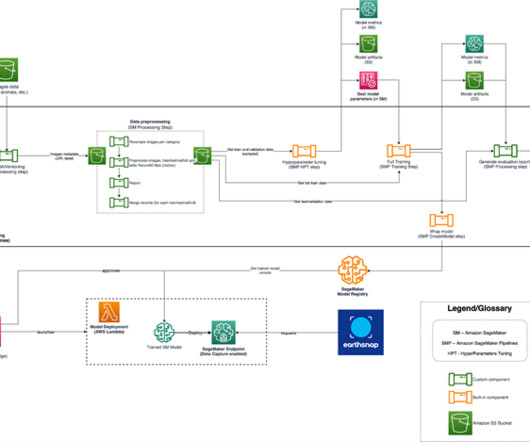

Amazon Personalize has helped us achieve high levels of automation in content customization. You follow the same process of data ingestion, training, and creating a batch inference job as in the previous use case. Getting recommendations along with metadata makes it more convenient to provide additional context to LLMs.
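As a minimal sketch of that last point, the snippet below turns a list of recommended items and their metadata into context for an LLM prompt. The `build_prompt` helper, the prompt wording, and the sample data are hypothetical. The `itemId` and `metadata` keys are an assumption about how the recommendation results are shaped, so adjust them to match your actual output.

```python
from typing import Any


def build_prompt(user_id: str, item_list: list[dict[str, Any]], max_items: int = 3) -> str:
    """Turn recommended items and their metadata into LLM prompt context."""
    lines = []
    for rank, item in enumerate(item_list[:max_items], start=1):
        # Metadata may be missing or empty for some items.
        metadata = item.get("metadata") or {}
        # Sort keys so the prompt text is deterministic.
        details = ", ".join(f"{key}: {value}" for key, value in sorted(metadata.items()))
        if details:
            lines.append(f"{rank}. {item['itemId']} ({details})")
        else:
            lines.append(f"{rank}. {item['itemId']}")
    catalog = "\n".join(lines)
    return (
        f"Write a short, friendly message for user {user_id} "
        "recommending the following items:\n"
        f"{catalog}"
    )


# Hypothetical sample data; the key names are assumptions, not a confirmed schema.
sample_items = [
    {"itemId": "movie-101", "metadata": {"genres": "Drama", "title": "Example Film"}},
    {"itemId": "movie-202", "metadata": {}},
]
prompt = build_prompt("user-42", sample_items)
print(prompt)
```

The resulting string can be passed to whichever LLM you use. Keeping the metadata inline with each item gives the model the context it needs without a separate catalog lookup.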
