Accelerating time-to-insight with MongoDB time series collections and Amazon SageMaker Canvas

AWS Machine Learning Blog

DECEMBER 18, 2023

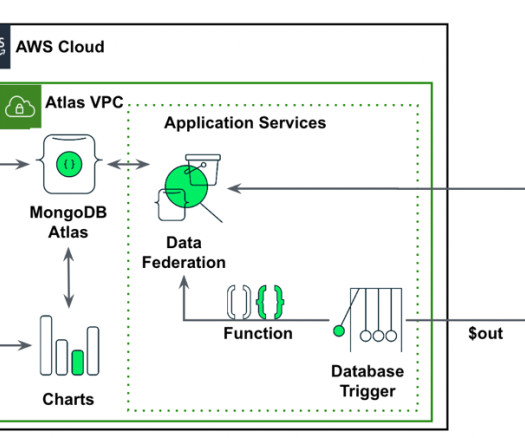

MongoDB Atlas offers automatic sharding, horizontal scalability, and flexible indexing for high-volume data ingestion. Among these features, its native time series capabilities stand out, making it well suited to managing high volumes of time series data such as business-critical application data, telemetry, and server logs.
