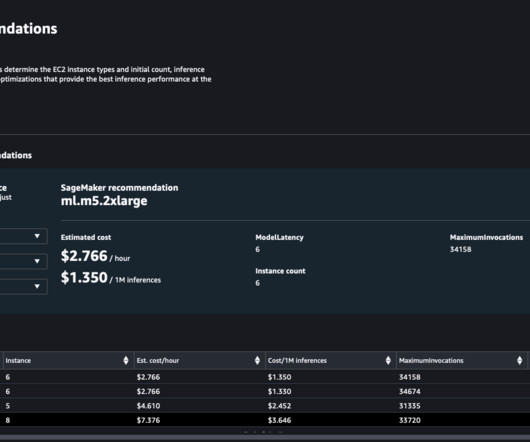

Improved ML model deployment using Amazon SageMaker Inference Recommender

AWS Machine Learning Blog

APRIL 20, 2023

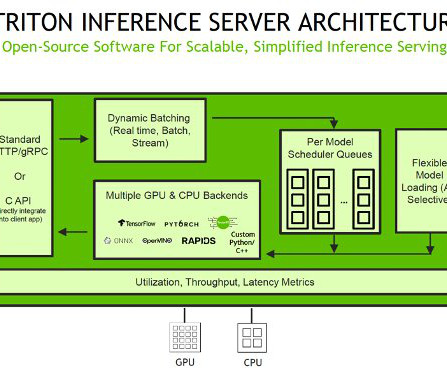

Each machine learning (ML) system has a unique service level agreement (SLA) requirement with respect to latency, throughput, and cost metrics. With advancements in hardware design, a wide range of CPU- and GPU-based infrastructures are available to help you speed up inference performance.

Let's personalize your content