TensorRT-LLM: A Comprehensive Guide to Optimizing Large Language Model Inference for Maximum Performance

Unite.AI

SEPTEMBER 13, 2024

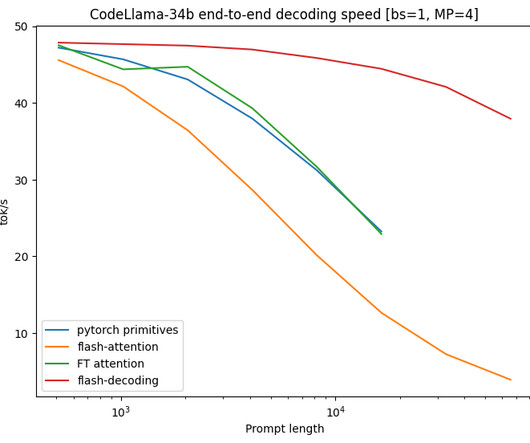

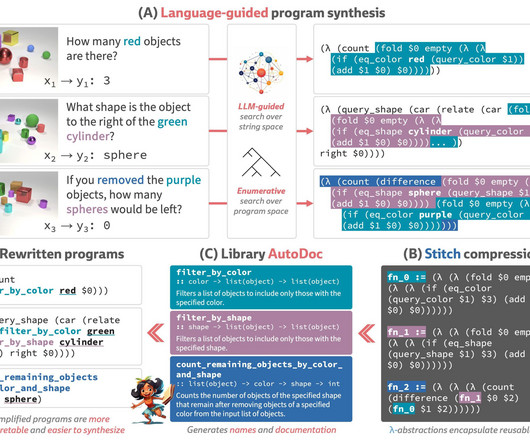

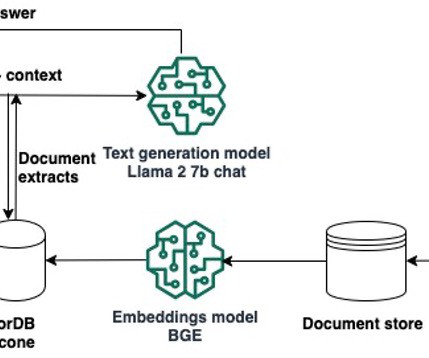

As the demand for large language models (LLMs) continues to rise, ensuring fast, efficient, and scalable inference has become more crucial than ever. NVIDIA's TensorRT-LLM steps in to address this challenge by providing a set of powerful tools and optimizations specifically designed for LLM inference.

Let's personalize your content