Making Sense of the Mess: LLMs' Role in Unstructured Data Extraction

Unite.AI

MAY 29, 2024

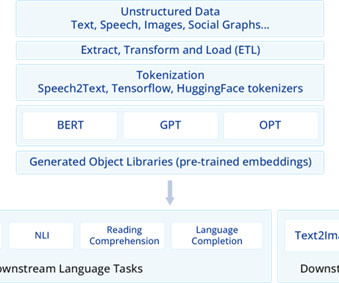

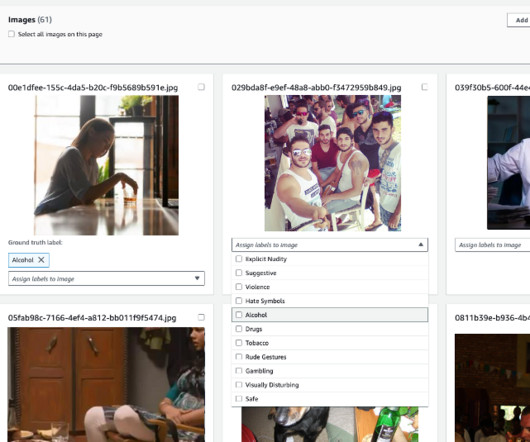

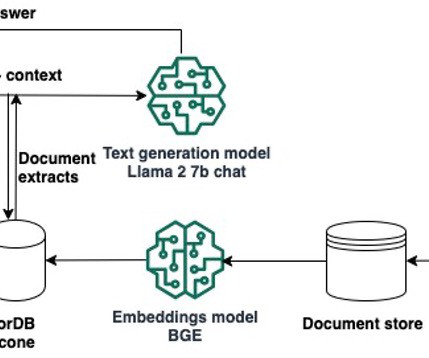

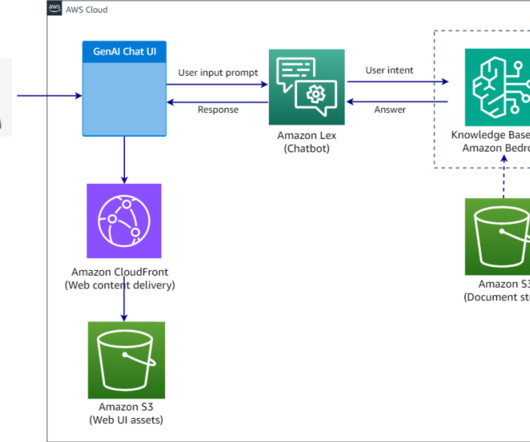

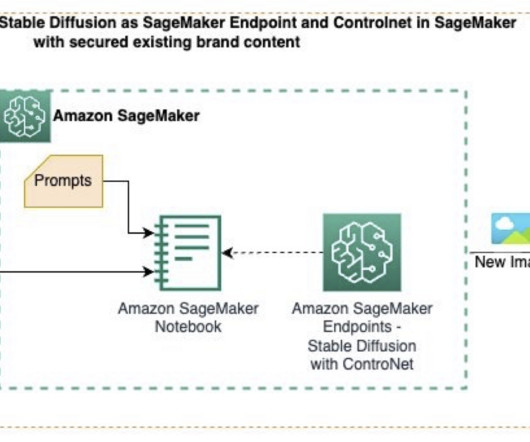

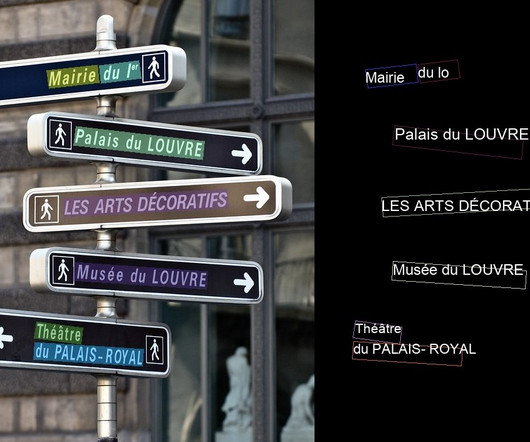

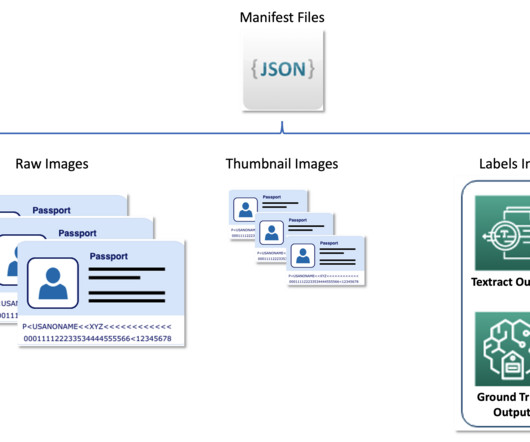

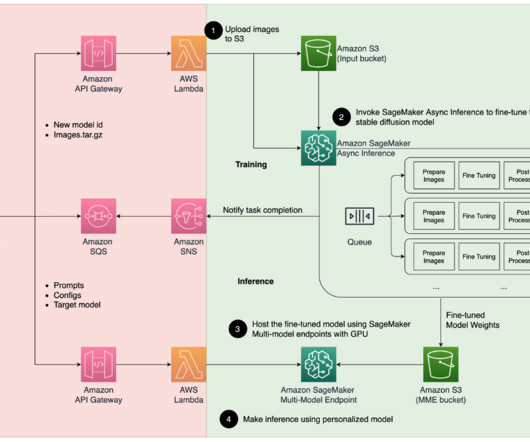

This advancement has spurred the commercial use of generative AI in natural language processing (NLP) and computer vision, enabling automated, intelligent data extraction.

Image and Document Processing: Multimodal LLMs are increasingly taking over tasks that once required dedicated optical character recognition (OCR) pipelines.
