Improved ML model deployment using Amazon SageMaker Inference Recommender

AWS Machine Learning Blog

APRIL 20, 2023

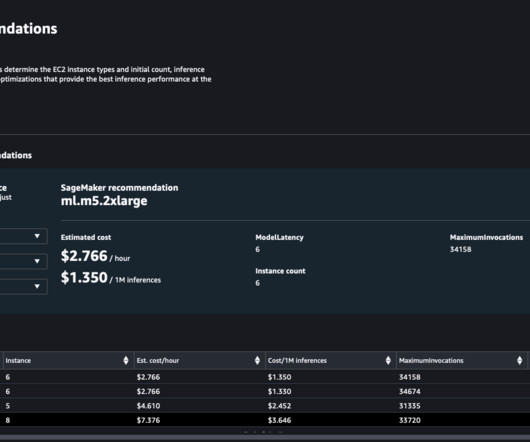

With advancements in hardware design, a wide range of CPU- and GPU-based infrastructure is available to help you speed up inference performance. Analyze the default and advanced Inference Recommender job results, which include ML instance type recommendations along with latency, performance, and cost metrics.

`sm_client = boto3.client("sagemaker",`
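As a rough illustration of analyzing job results, the sketch below flattens the response of the boto3 `describe_inference_recommendations_job` call into rows sorted by cost per inference. The helper names `summarize_recommendations` and `fetch_recommendations`, the optional `region_name` argument, and the choice to sort by cost are assumptions made for this example and are not taken from the original post; the response field names follow the SageMaker API as we understand it, so treat this as a starting point rather than a definitive implementation.

```python
def summarize_recommendations(response):
    """Flatten a DescribeInferenceRecommendationsJob response into plain rows.

    Rows are sorted by cost per inference (cheapest first); entries that
    lack that metric are placed last.
    """
    rows = []
    for rec in response.get("InferenceRecommendations", []):
        config = rec.get("EndpointConfiguration", {})
        metrics = rec.get("Metrics", {})
        rows.append({
            "instance_type": config.get("InstanceType"),
            "instance_count": config.get("InitialInstanceCount"),
            "model_latency": metrics.get("ModelLatency"),
            "max_invocations": metrics.get("MaxInvocations"),
            "cost_per_hour": metrics.get("CostPerHour"),
            "cost_per_inference": metrics.get("CostPerInference"),
        })
    return sorted(
        rows,
        key=lambda r: (r["cost_per_inference"] is None,
                       r["cost_per_inference"] or 0.0),
    )


def fetch_recommendations(job_name, region_name=None):
    """Hypothetical helper: fetch and summarize results for a finished job.

    Requires AWS credentials; boto3 is imported lazily so that the
    summarizer above can be used without it.
    """
    import boto3

    sm_client = boto3.client("sagemaker", region_name=region_name)
    response = sm_client.describe_inference_recommendations_job(JobName=job_name)
    return summarize_recommendations(response)
```

Calling `fetch_recommendations` with the name of a completed Inference Recommender job returns one row per recommended instance type, which makes it easier to compare latency and cost side by side.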
