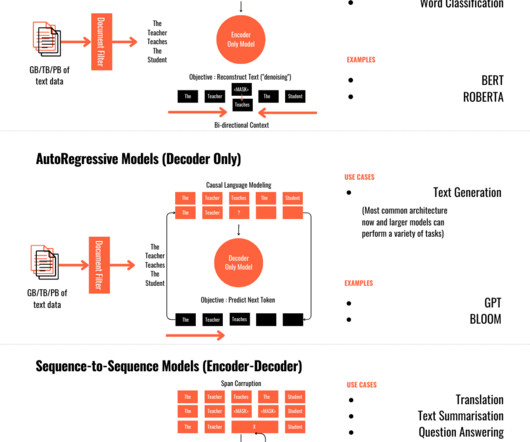

Introduction to Large Language Models (LLMs): An Overview of BERT, GPT, and Other Popular Models

John Snow Labs

JUNE 27, 2023

Prepare to be amazed as we delve into the world of Large Language Models (LLMs) – the driving force behind NLP’s remarkable progress. In this comprehensive overview, we will explore the definition, significance, and real-world applications of these game-changing models.

What are Large Language Models (LLMs)?
