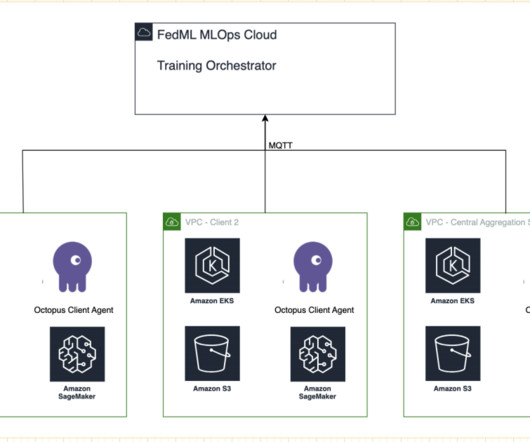

Federated learning on AWS using FedML, Amazon EKS, and Amazon SageMaker

AWS Machine Learning Blog

MARCH 15, 2024

Many organizations are implementing machine learning (ML) to enhance their business decision-making through automation and the use of large distributed datasets. With increased access to data, ML has the potential to provide unparalleled business insights and opportunities.

Let's personalize your content