sktime - Python Toolbox for Machine Learning with Time Series

ODSC - Open Data Science

MAY 25, 2023

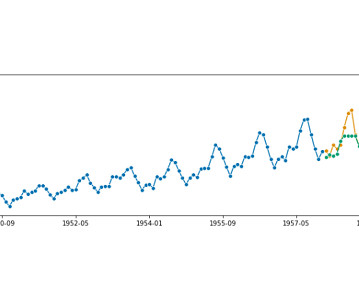

Here’s what you need to know: sktime is a Python package for time series tasks such as forecasting, classification, and transformation, with a familiar and user-friendly API modeled on scikit-learn. You can build tuned AutoML pipelines and reach well-known libraries (scikit-learn, statsmodels, tsfresh, PyOD, fbprophet, and more!) through a common interface.
