Researchers from Fudan University and Shanghai AI Lab Introduce DOLPHIN: A Closed-Loop Framework for Automating Scientific Research with Iterative Feedback

Marktechpost

JANUARY 12, 2025

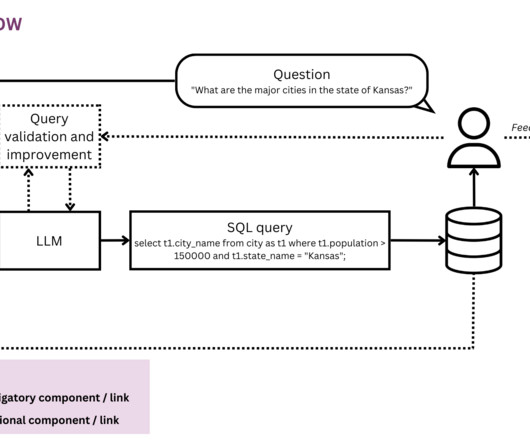

Researchers ultimately want a system that can complete the full research cycle without human involvement. Toward that goal, Fudan University and the Shanghai Artificial Intelligence Laboratory have developed DOLPHIN, a closed-loop auto-research framework that covers the entire scientific research process.
