What Are the Different Types of Transformers in AI?

Mlearning.ai

JUNE 22, 2023

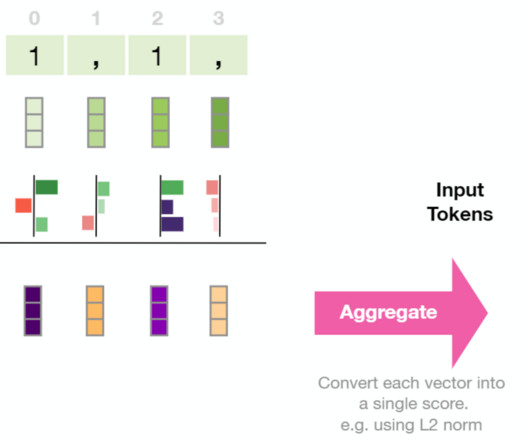

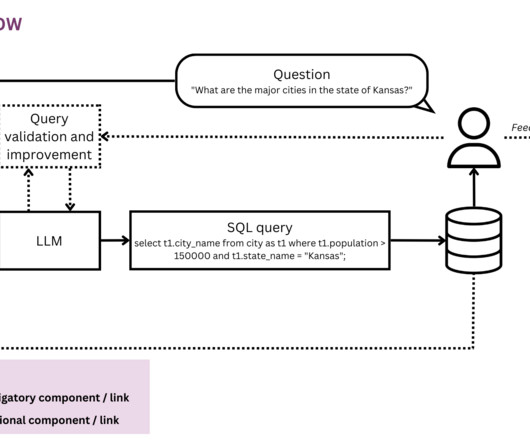

In this article, we will delve into the three broad categories of transformer models based on their training methodologies: GPT-like (auto-regressive), BERT-like (auto-encoding), and BART/T5-like (sequence-to-sequence). In such cases, we might not always have a complete sequence we are mapping to or from.
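One way to picture the difference between the three families is through the attention mask each one typically uses, that is, which positions a token is allowed to look at. The following is a minimal sketch in plain Python, not taken from the article: the function names (`causal_mask`, `bidirectional_mask`, `seq2seq_masks`) are hypothetical, and a `1` at row `i`, column `j` is assumed to mean "position `i` may attend to position `j`".

```python
def causal_mask(n):
    """GPT-like (auto-regressive): position i sees only positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """BERT-like (auto-encoding): every position sees every position."""
    return [[1] * n for _ in range(n)]


def seq2seq_masks(src_len, tgt_len):
    """BART/T5-like (sequence-to-sequence): a bidirectional encoder,
    a causal decoder, and decoder-to-encoder cross-attention that can
    see the whole source sequence."""
    return {
        "encoder_self": bidirectional_mask(src_len),
        "decoder_self": causal_mask(tgt_len),
        "decoder_cross": [[1] * src_len for _ in range(tgt_len)],
    }


if __name__ == "__main__":
    print("GPT-like causal mask (3 tokens):")
    for row in causal_mask(3):
        print(row)

    print("BERT-like bidirectional mask (3 tokens):")
    for row in bidirectional_mask(3):
        print(row)

    print("Seq2seq masks (2 source tokens, 3 target tokens):")
    for name, mask in seq2seq_masks(2, 3).items():
        print(name, mask)
```

Real implementations usually express these masks as tensors and add padding masks on top, but the triangular versus full pattern is the part that separates the three categories.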
