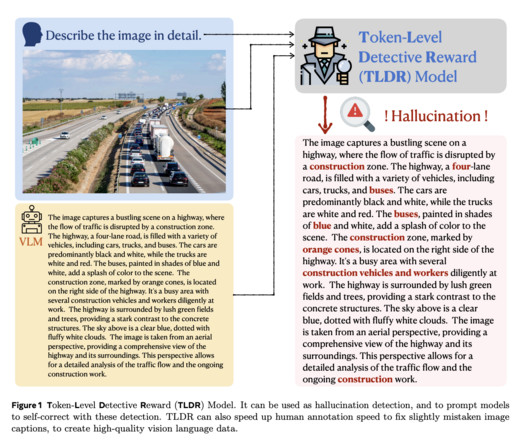

Meta AI Researchers Introduce Token-Level Detective Reward Model (TLDR) to Provide Fine-Grained Annotations for Large Vision Language Models

Marktechpost

OCTOBER 26, 2024

These approaches typically involve training reward models on human preference data and using algorithms like Proximal Policy Optimization (PPO) or Direct Policy Optimization (DPO) for policy learning. If you like our work, you will love our newsletter. Don’t Forget to join our 55k+ ML SubReddit.

Let's personalize your content