Ludwig: A Comprehensive Guide to LLM Fine Tuning using LoRA

Analytics Vidhya

MAY 8, 2024

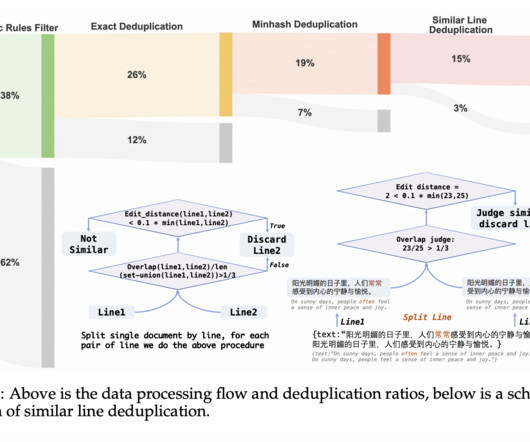

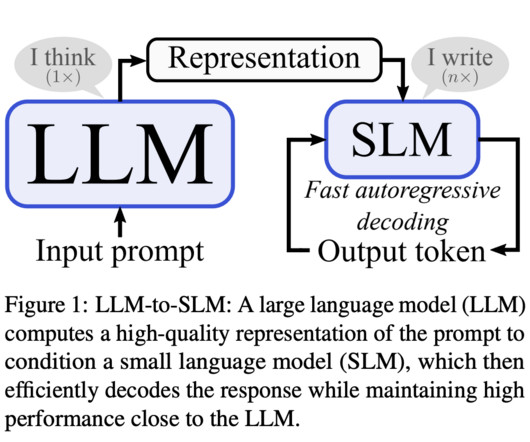

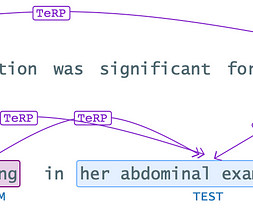

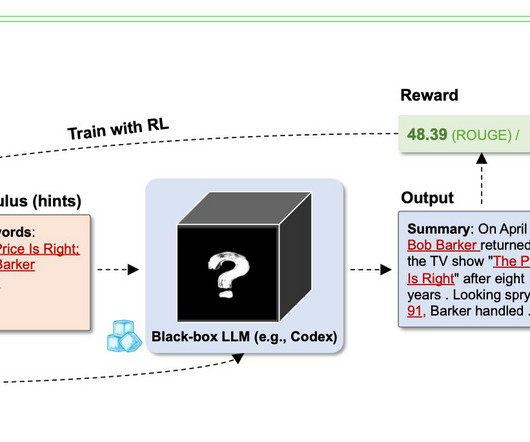

Introduction to Ludwig

The development of Natural Language Processing (NLP) and Artificial Intelligence (AI) has significantly impacted the field. Ludwig, a low-code framework, is designed […]
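The excerpt breaks off before the LoRA details. The sketch below illustrates the general idea named in the title; it is not Ludwig's internal code. LoRA (Low-Rank Adaptation) keeps a pretrained weight matrix `W` frozen and trains only a low-rank update `B @ A`, scaled by `alpha / r`. The class name `LoRALinear`, the use of NumPy, and the values chosen for `r` and `alpha` are illustrative assumptions.

```python
import numpy as np


class LoRALinear:
    """Hypothetical frozen linear layer W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: np.ndarray, r: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                              # frozen pretrained weight, shape (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))    # trainable down-projection, small random init
        self.B = np.zeros((d_out, r))                     # trainable up-projection, zero init
        self.scale = alpha / r                            # scaling factor applied to the update

    def forward(self, x: np.ndarray) -> np.ndarray:
        # x has shape (batch, d_in); the result has shape (batch, d_out)
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        # Only A and B are updated during fine-tuning; W stays fixed
        return self.A.size + self.B.size

    def merged_weight(self) -> np.ndarray:
        # After training, fold the update into W so inference needs no extra matmuls
        return self.weight + self.scale * self.B @ self.A


# Usage: adapt a 64x128 layer with rank 4
rng = np.random.default_rng(42)
W = rng.normal(size=(64, 128))
layer = LoRALinear(W, r=4, alpha=8.0)
x = rng.normal(size=(2, 128))
# Because B starts at zero, the adapted layer initially matches the frozen layer
print(np.allclose(layer.forward(x), x @ W.T))  # True
print(layer.trainable_params(), W.size)        # 768 8192
```

Because `B` is initialized to zero, fine-tuning starts from the pretrained model's behaviour. With rank 4 the layer trains 768 parameters instead of the 8,192 in `W`, which is the main reason LoRA lowers the memory cost of fine-tuning large models.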
