Mistral AI Introduces Les Ministraux: Ministral 3B and Ministral 8B - Revolutionizing On-Device AI

Marktechpost

OCTOBER 16, 2024

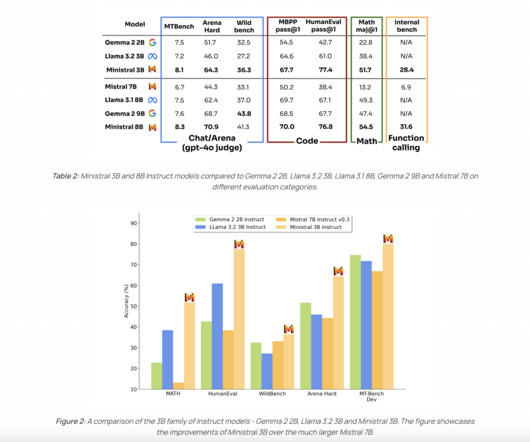

High-performance AI models that can run at the edge and on personal devices are needed to overcome the limitations of existing large-scale models. Mistral AI recently unveiled two groundbreaking models aimed at transforming on-device and edge AI capabilities: Ministral 3B and Ministral 8B.
