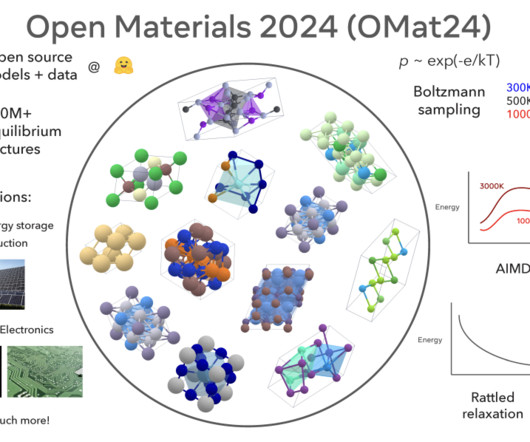

Meta AI Releases Meta’s Open Materials 2024 (OMat24) Inorganic Materials Dataset and Models

Marktechpost

OCTOBER 20, 2024

Researchers from Meta Fundamental AI Research (FAIR) have introduced the Open Materials 2024 (OMat24) dataset, which contains over 110 million density functional theory (DFT) calculations, making it one of the largest publicly available datasets for inorganic materials.
