Top BERT Applications You Should Know About

Marktechpost

AUGUST 7, 2023

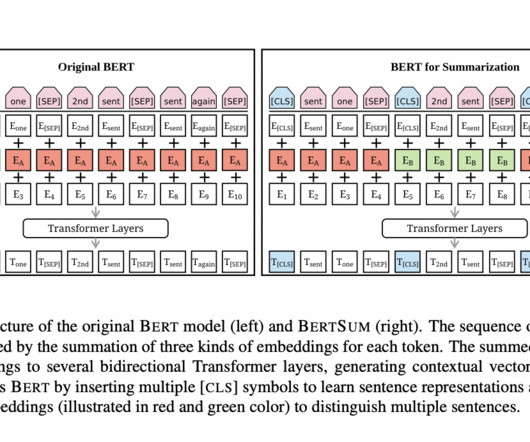

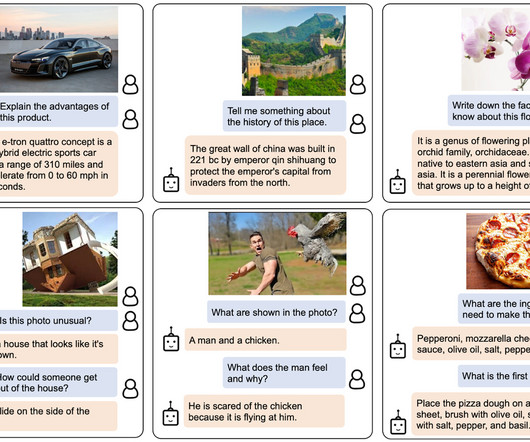

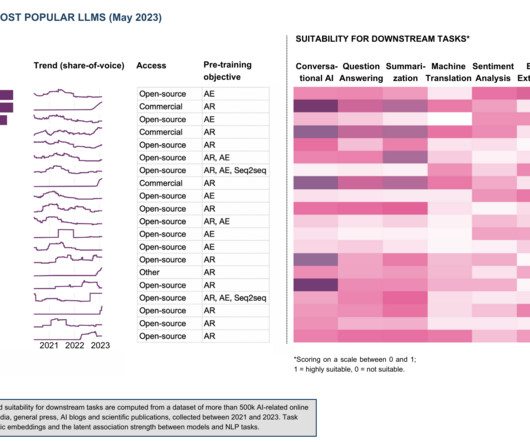

Models like GPT, BERT, and PaLM are gaining popularity for good reason. BERT, which stands for Bidirectional Encoder Representations from Transformers, is one of the best known of these models and has a wide range of applications. Recent research investigates its potential for text summarization.
