Build an AI Research Assistant Using CrewAI and Composio

Analytics Vidhya

MAY 22, 2024

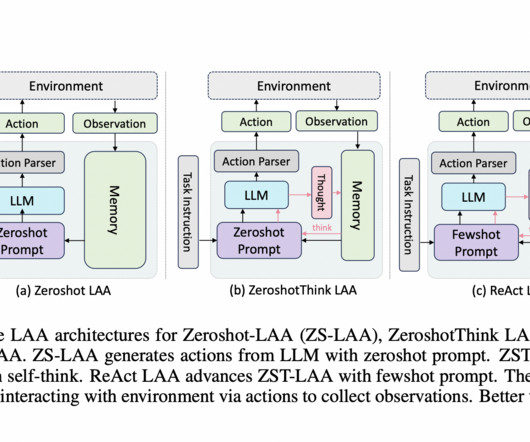

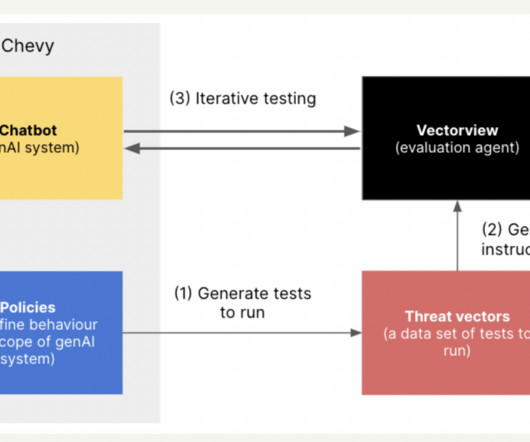

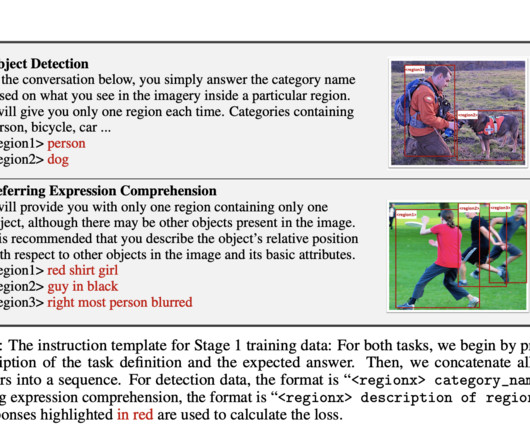

Introduction: With every iteration of LLM development, we are nearing the age of AI agents. On an enterprise […] The post Build an AI Research Assistant Using CrewAI and Composio appeared first on Analytics Vidhya.
