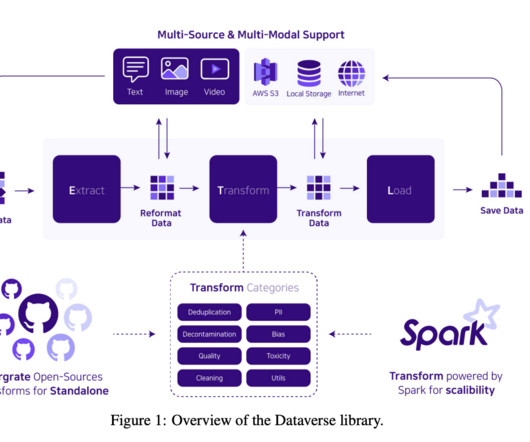

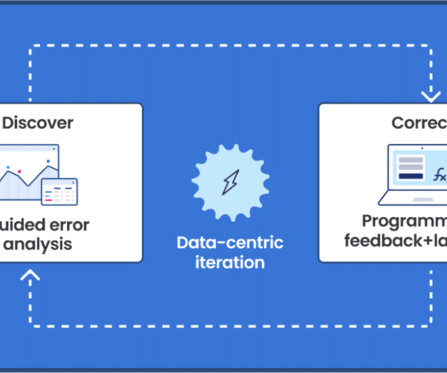

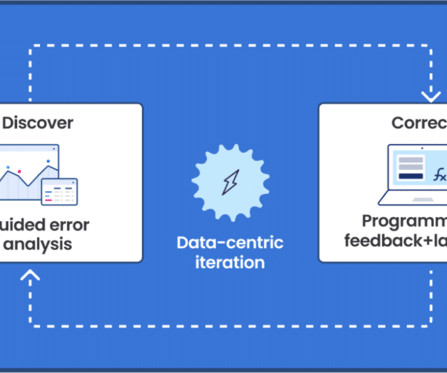

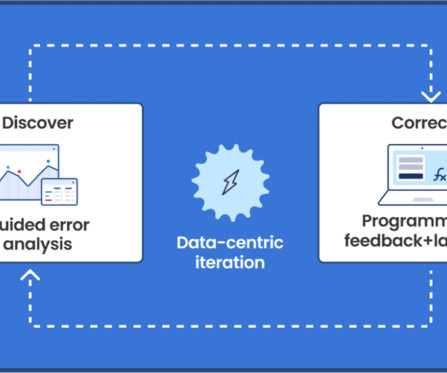

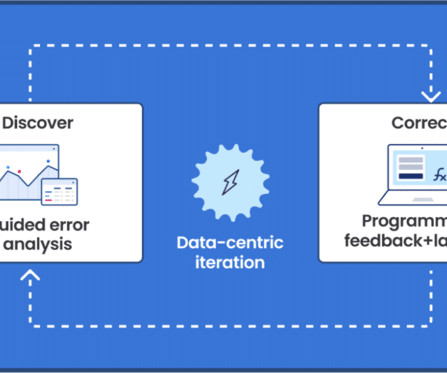

Upstage AI Introduces Dataverse for Addressing Challenges in Data Processing for Large Language Models

Marktechpost

APRIL 1, 2024

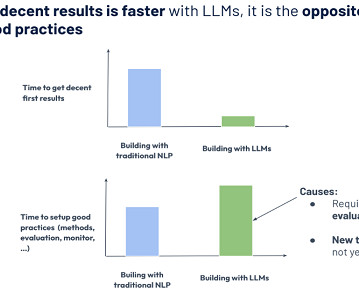

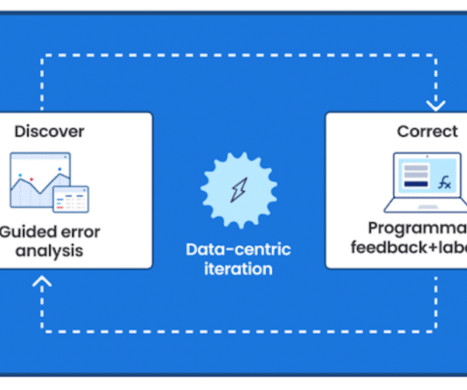

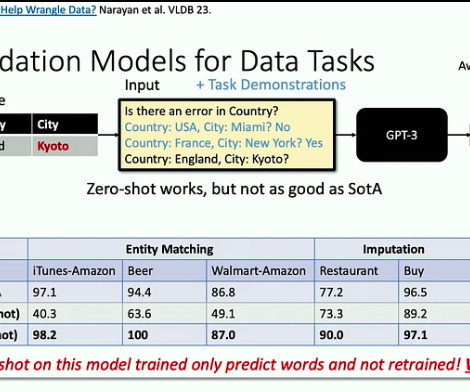

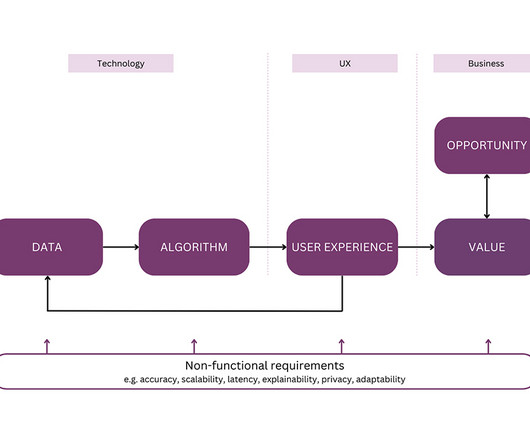

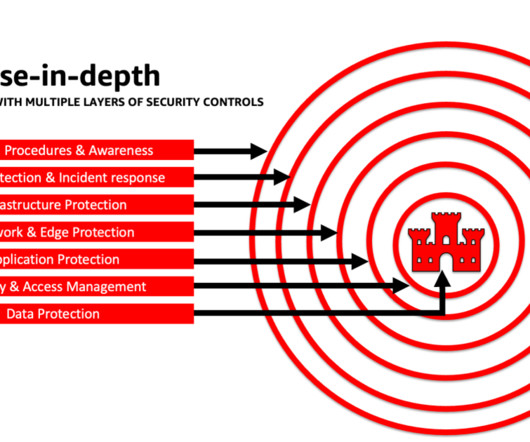

With large language models (LLMs) now incorporated into almost every field of technology, processing the large datasets these models require poses challenges in both scalability and efficiency.