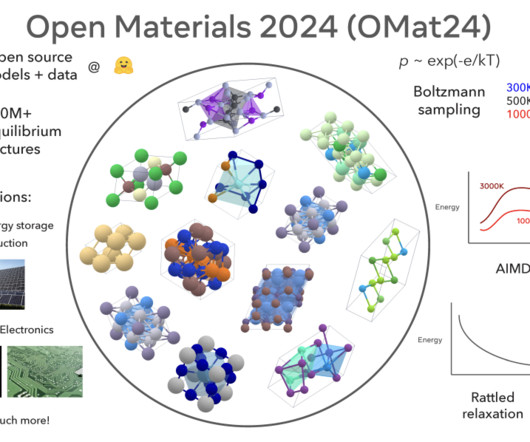

Meta AI Releases Meta’s Open Materials 2024 (OMat24) Inorganic Materials Dataset and Models

Marktechpost

OCTOBER 20, 2024

While AI has emerged as a powerful tool for materials discovery, the lack of publicly available data and open, pre-trained models has become a major bottleneck. The introduction of the OMat24 dataset and the corresponding models represents a significant leap forward in AI-assisted materials science.
