With Generative AI Advances, The Time to Tackle Responsible AI Is Now

Unite.AI

OCTOBER 20, 2023

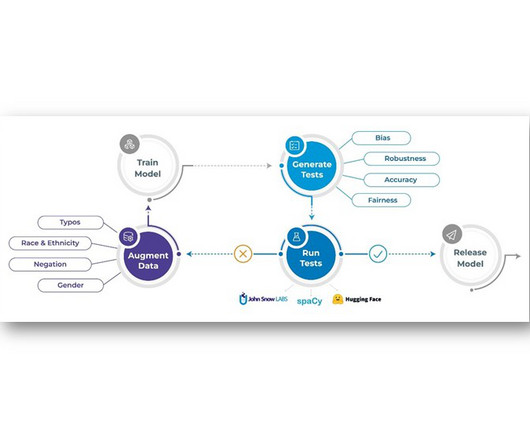

In 2022, companies had an average of 3.8 AI models in production. Today, seven in 10 companies are experimenting with generative AI, which suggests that the number of AI models in production will rise sharply over the coming years. As a result, industry discussions around responsible AI have taken on greater urgency.
