AI News Weekly - Issue #343: Summer Fiction Reads about AI - Jul 27th 2023

AI Weekly

JULY 27, 2023

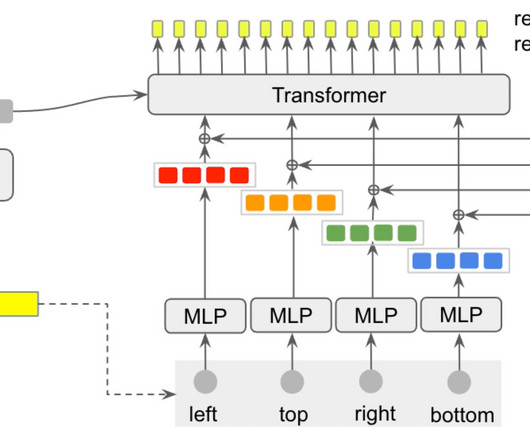

techcrunch.com

The Essential Artificial Intelligence Glossary for Marketers (90+ Terms)

BERT: Bidirectional Encoder Representations from Transformers (BERT) is Google’s deep learning model designed explicitly for natural language processing tasks like answering questions, analyzing sentiment, and translation.
