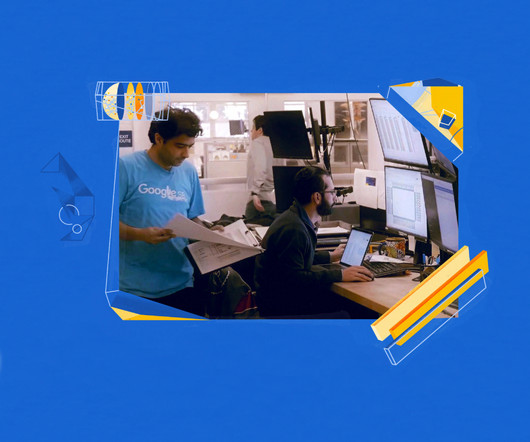

Google Research, 2022 & beyond: Algorithmic advances

Google Research AI blog

FEBRUARY 10, 2023

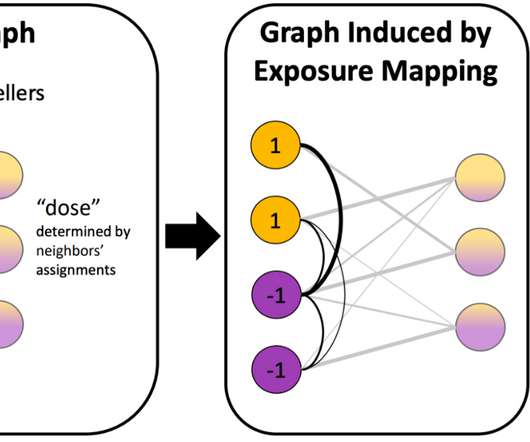

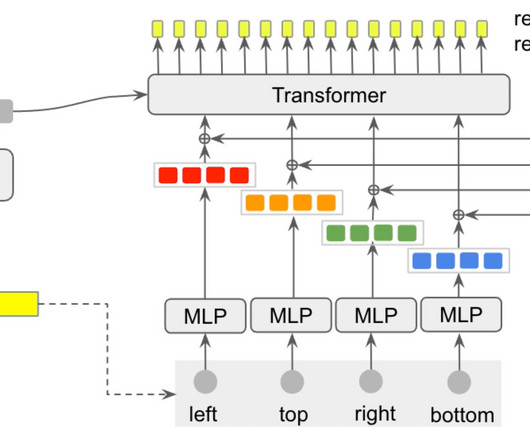

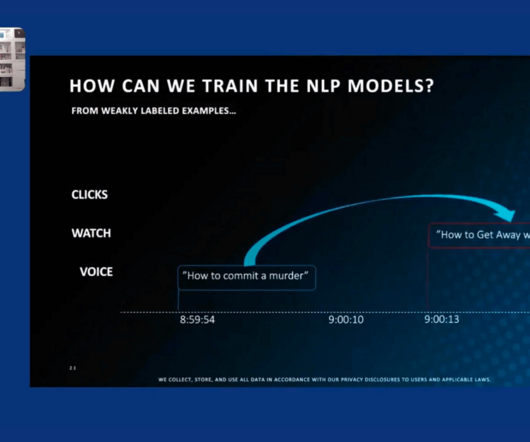

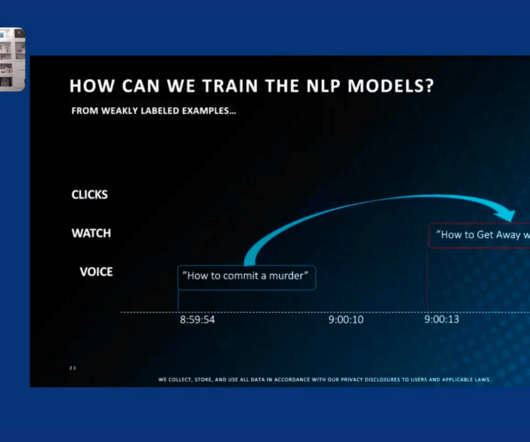

Robust algorithm design is the backbone of systems across Google, particularly for our ML and AI models. Hence, developing algorithms with improved efficiency, performance, and speed remains a high priority, as it empowers services ranging from Search and Ads to Maps and YouTube. You can find other posts in the series here.
