Automated Fine-Tuning of LLAMA2 Models on Gradient AI Cloud

Analytics Vidhya

JANUARY 24, 2024

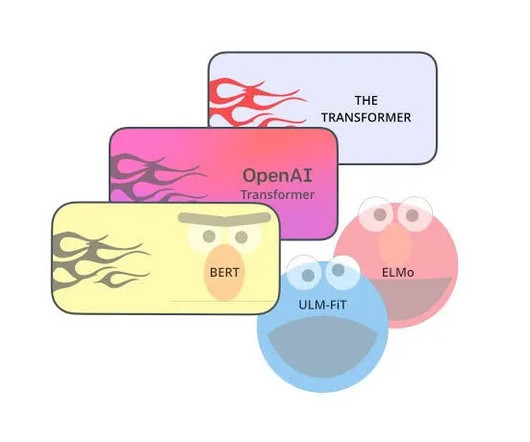

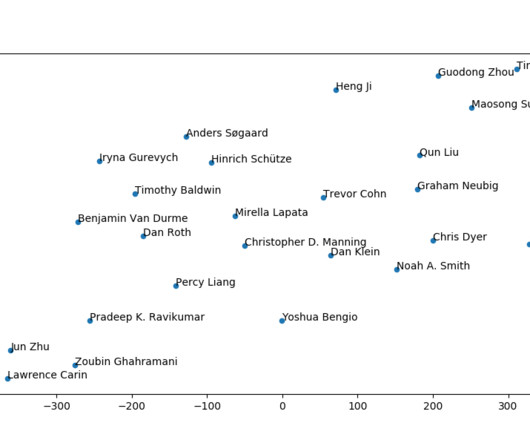

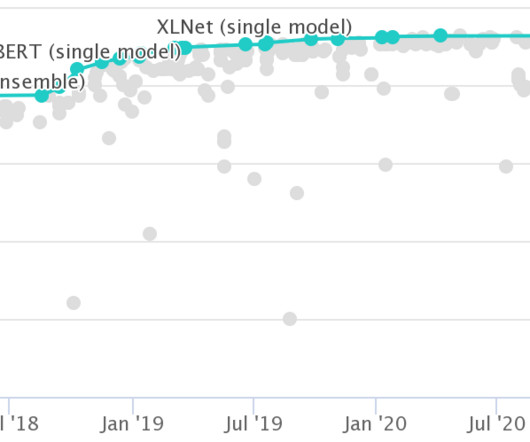

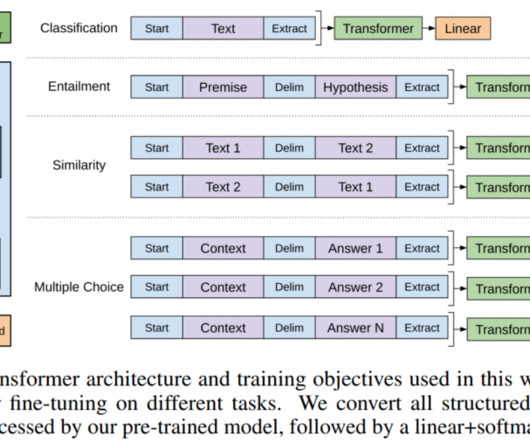

Introduction

Welcome to the world of Large Language Models (LLMs). In 2018, the “Universal Language Model Fine-tuning for Text Classification” paper changed the entire landscape of Natural Language Processing (NLP).
