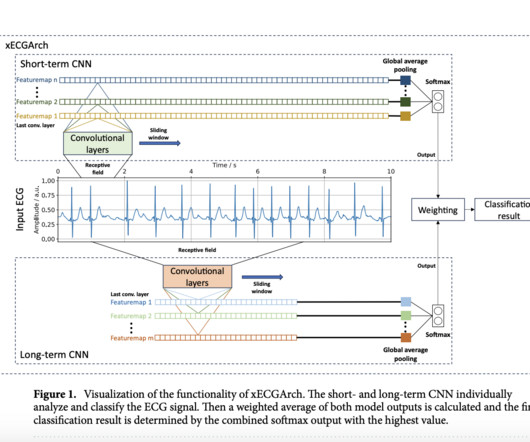

xECGArch: A Multi-Scale Convolutional Neural Network (CNN) for Accurate and Interpretable Atrial Fibrillation Detection in ECG Analysis

Marktechpost

JUNE 9, 2024

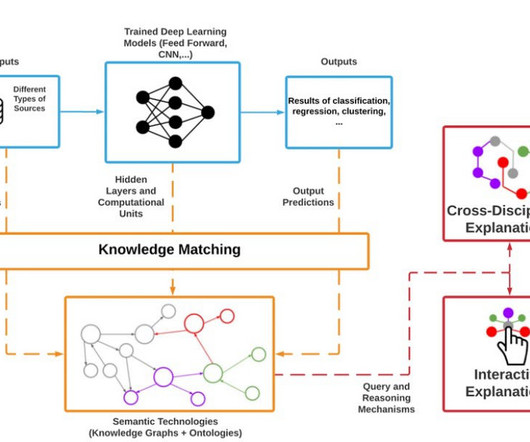

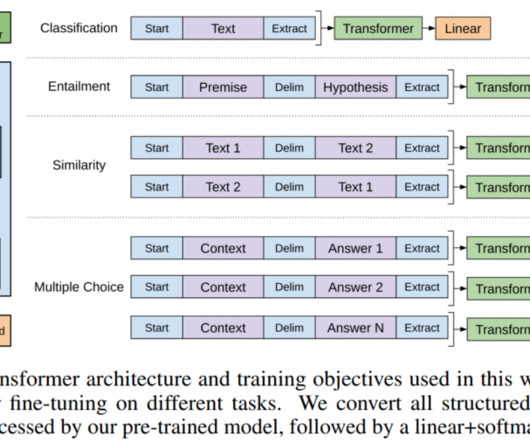

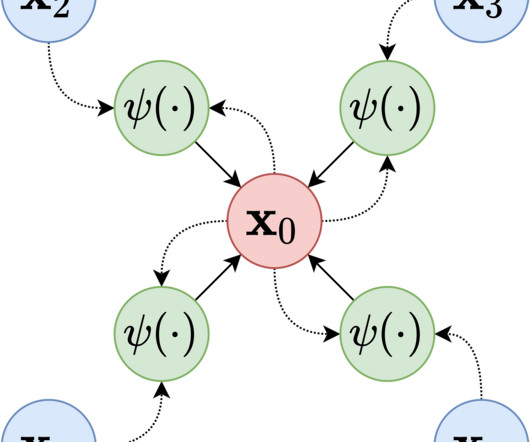

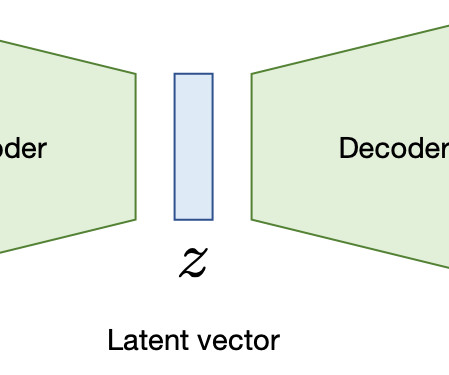

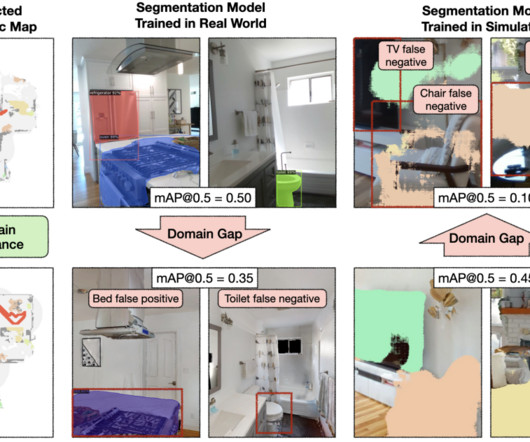

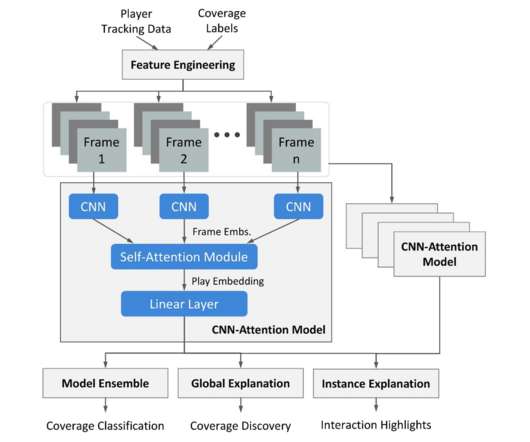

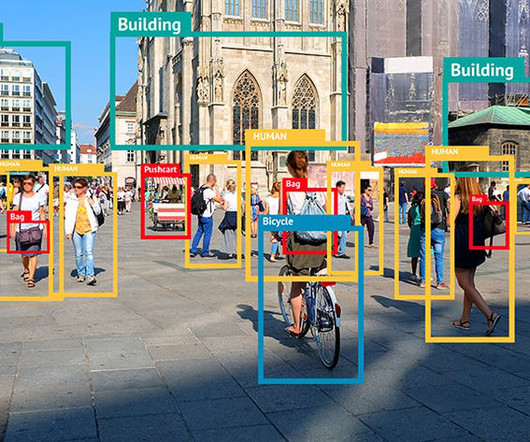

Explainable AI (xAI) methods, such as saliency maps and attention mechanisms, attempt to make deep learning models for ECG analysis more transparent by highlighting the ECG features that most influence their predictions. xECGArch distinguishes itself by separating short-term (morphological) and long-term (rhythmic) ECG features, processing each with one of two independent convolutional neural networks (CNNs).
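This excerpt does not give xECGArch's layer configuration, kernel sizes, fusion rule, or explanation method, so what follows is only a minimal pure-Python sketch of the general idea under stated assumptions. The names `Branch`, `DualBranchECG`, and `occlusion_saliency`, the kernel size of 5, the dilation of 50, and fusing the two branches by averaging their logits are all hypothetical choices for illustration. Occlusion (zeroing a window of the signal and measuring the drop in predicted probability) is swapped in as a simple perturbation-based saliency method; it is not necessarily the technique xECGArch uses. Weights are random and untrained, so the printed probability carries no clinical meaning.

```python
import math
import random


def conv1d(signal, kernel, dilation=1):
    """'Valid' 1-D cross-correlation (what CNN conv layers compute), with dilation."""
    span = (len(kernel) - 1) * dilation + 1  # receptive field of one output sample
    if len(signal) < span:
        raise ValueError("signal shorter than the kernel's receptive field")
    return [
        sum(w * signal[start + i * dilation] for i, w in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


class Branch:
    """Hypothetical CNN branch: conv filters -> ReLU -> global average pool -> linear score."""

    def __init__(self, kernel_size, dilation, n_filters, seed):
        rng = random.Random(seed)  # random, untrained weights (illustration only)
        self.dilation = dilation
        self.kernels = [[rng.uniform(-1, 1) for _ in range(kernel_size)]
                        for _ in range(n_filters)]
        self.head = [rng.uniform(-1, 1) for _ in range(n_filters)]

    def receptive_field(self):
        return (len(self.kernels[0]) - 1) * self.dilation + 1

    def logit(self, signal):
        pooled = []
        for k in self.kernels:
            activations = [max(0.0, x) for x in conv1d(signal, k, self.dilation)]
            pooled.append(sum(activations) / len(activations))
        return sum(w * f for w, f in zip(self.head, pooled))


class DualBranchECG:
    """Hypothetical two-branch model: short-term (morphology) + long-term (rhythm)."""

    def __init__(self):
        # Short receptive field: a few samples, i.e. the shape of a single waveform.
        self.short_term = Branch(kernel_size=5, dilation=1, n_filters=4, seed=0)
        # Dilated kernel with a long receptive field spanning several beats.
        self.long_term = Branch(kernel_size=5, dilation=50, n_filters=4, seed=1)

    def predict_proba(self, signal):
        # Assumption: the two independent branches are fused by averaging their logits.
        z = 0.5 * (self.short_term.logit(signal) + self.long_term.logit(signal))
        return sigmoid(z)


def occlusion_saliency(model, signal, window):
    """Per-window relevance: drop in predicted probability when that window is zeroed."""
    base = model.predict_proba(signal)
    scores = []
    for start in range(0, len(signal), window):
        occluded = list(signal)
        for i in range(start, min(start + window, len(signal))):
            occluded[i] = 0.0
        scores.append(base - model.predict_proba(occluded))
    return scores


if __name__ == "__main__":
    # Synthetic "ECG": a narrow spike (stand-in for an R peak) every 100 samples.
    ecg = [1.0 if i % 100 == 0 else 0.0 for i in range(1000)]
    model = DualBranchECG()
    print("short-term receptive field:", model.short_term.receptive_field())  # 5
    print("long-term receptive field:", model.long_term.receptive_field())    # 201
    print("P(AF) from untrained weights:", round(model.predict_proba(ecg), 3))
    print("window relevance:", [round(s, 3) for s in occlusion_saliency(model, ecg, 100)])
```

Keeping the branches separate means each one's relevance can be read against the time scale it is able to see: the 5-sample branch can only respond to local waveform shape, while the dilated branch, whose taps sit 50 samples apart, can respond to the spacing between beats.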

Let's personalize your content