MIT Researchers Uncover New Insights into Brain-Auditory Connections with Advanced Neural Network Models

Marktechpost

DECEMBER 18, 2023

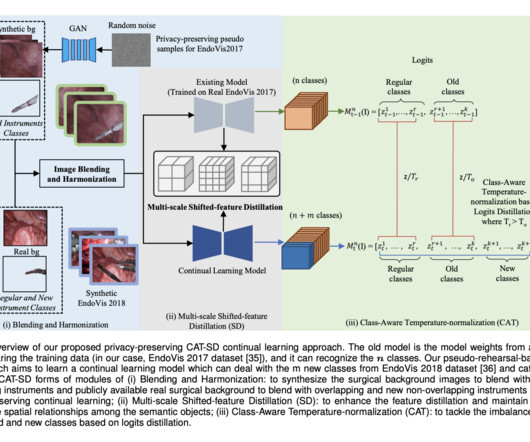

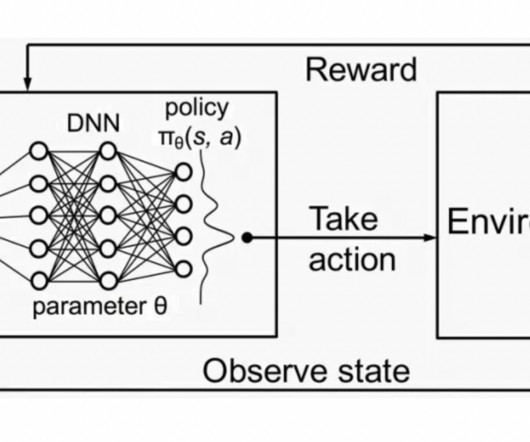

MIT researchers have used deep neural networks to study how the human auditory system works. The research builds on prior work in which neural networks were trained to perform specific auditory tasks, such as recognizing words from audio signals.
