Inductive biases of neural network modularity in spatial navigation

ML @ CMU

JANUARY 2, 2025

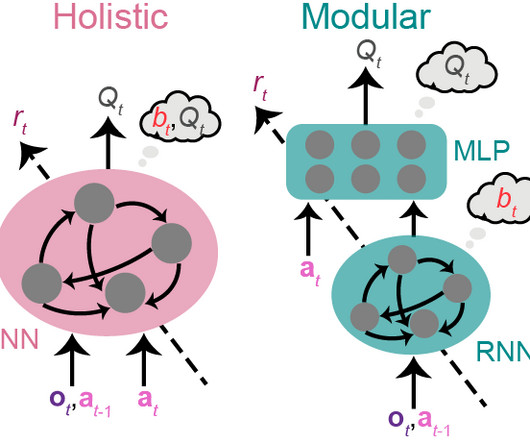

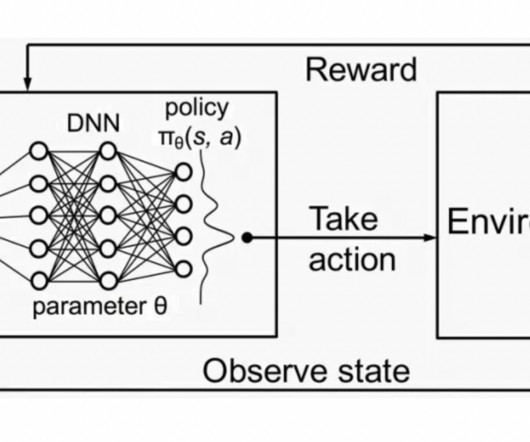

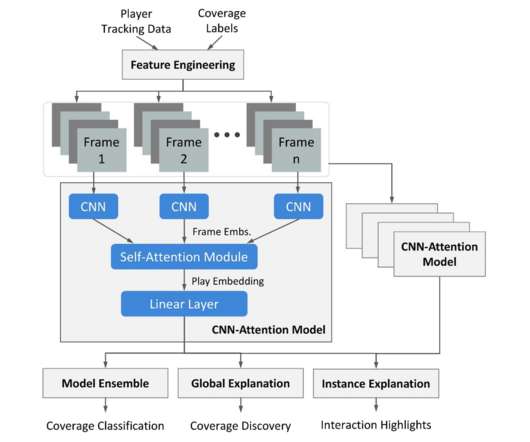

We use a model-free actor-critic approach to learning, in which the actor and the critic are implemented as distinct neural networks. In practice, our algorithm is off-policy and incorporates mechanisms such as two critic networks and target networks, as in TD3 (Fujimoto et al.,
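To make the two TD3 mechanisms concrete, here is a minimal sketch of the critic target with two target critics (clipped double-Q learning, plus TD3's target policy smoothing) and of the soft update that keeps target networks slowly tracking the online ones. It assumes a scalar action in [-1, 1] and uses plain Python callables as stand-ins for the neural networks. The function names `td3_target` and `soft_update` and the default hyperparameters are illustrative assumptions, not the authors' code or settings.

```python
import random


def soft_update(target_params, online_params, tau=0.005):
    """Polyak-average online parameters into target parameters, in place.

    Each target parameter becomes tau * online + (1 - tau) * target.
    The lists of floats are hypothetical stand-ins for network weights.
    """
    for i, (t, o) in enumerate(zip(target_params, online_params)):
        target_params[i] = tau * o + (1.0 - tau) * t
    return target_params


def td3_target(reward, done, next_state, target_actor, target_q1, target_q2,
               gamma=0.99, policy_noise=0.2, noise_clip=0.5,
               action_low=-1.0, action_high=1.0, rng=random):
    """Bootstrapped critic target in the style of TD3 (illustrative sketch).

    target_actor(s) -> action and target_q1/target_q2(s, a) -> value are
    hypothetical callables standing in for the target networks.
    """
    # Target policy smoothing: add clipped Gaussian noise to the target action.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, policy_noise)))
    next_action = max(action_low,
                      min(action_high, target_actor(next_state) + noise))
    # Clipped double-Q: use the smaller of the two target critics' estimates
    # to counteract overestimation bias.
    q_next = min(target_q1(next_state, next_action),
                 target_q2(next_state, next_action))
    # No bootstrapping past a terminal transition (done == 1).
    return reward + gamma * (1.0 - done) * q_next


if __name__ == "__main__":
    actor = lambda s: 0.5 * s          # toy deterministic target policy
    q1 = lambda s, a: s + a            # toy target critic 1
    q2 = lambda s, a: s + 2.0 * a      # toy target critic 2
    # With policy_noise=0 the target is 1.0 + 0.99 * min(1.5, 2.0).
    print(td3_target(1.0, 0.0, 1.0, actor, q1, q2, policy_noise=0.0))
    print(soft_update([0.0, 0.0], [1.0, 2.0], tau=0.5))
```

Taking the minimum over two critics is what the "two critic networks" buy: a single critic's errors tend to be exploited by the actor, so the more pessimistic estimate is used for the target. The small `tau` makes the target networks change slowly, which stabilizes the bootstrapped targets.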
