Modern NLP: A Detailed Overview. Part 2: GPTs

Towards AI

JULY 23, 2023

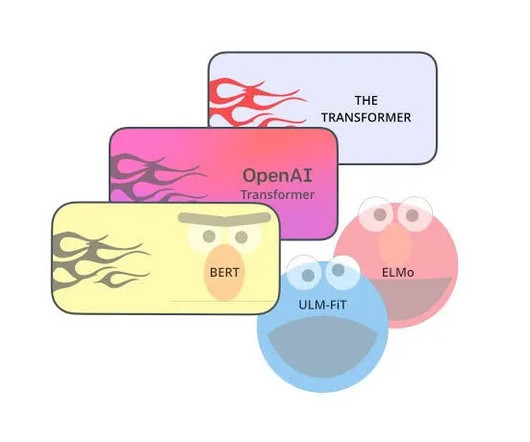

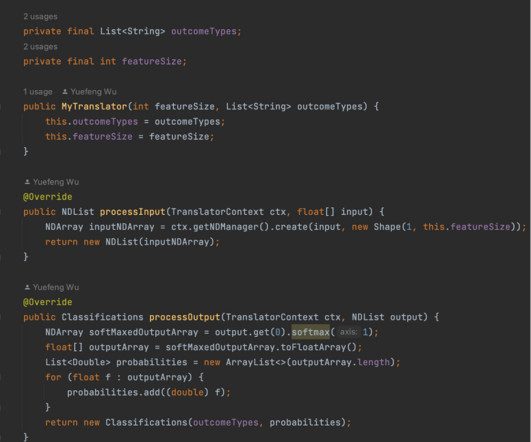

Generative Pre-trained Transformer (GPT)

Year and work published: In 2018, OpenAI introduced GPT. It showed that transformers can achieve state-of-the-art performance when combined with pre-training, transfer learning, and proper fine-tuning. In essence, GPT predicts each word from the context of the words that precede it.
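The left-to-right objective can be illustrated with a minimal Python sketch. It turns a sentence into (context, next word) training pairs and builds the causal mask a transformer decoder uses so that each position sees only earlier positions. The helper names `next_word_pairs` and `causal_mask` are hypothetical and are not OpenAI's code. The sketch also assumes whitespace-split words, whereas a real GPT works on subword tokens and learns the prediction with a stack of masked self-attention layers.

```python
from typing import List, Tuple


def next_word_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Build (context, target) pairs for left-to-right language modeling.

    Hypothetical helper: each target word is paired only with the words
    that precede it, mirroring the causal (next-word) objective.
    """
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def causal_mask(n: int) -> List[List[int]]:
    """Return an n x n mask where row i marks which positions i may attend to.

    Hypothetical helper: 1 means visible (position j <= i), 0 means a
    future position that is masked out.
    """
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    # Every prefix of the sentence becomes a context for predicting the next word.
    for context, target in next_word_pairs(sentence):
        print(" ".join(context), "->", target)
    # Lower-triangular pattern: no position can look ahead.
    for row in causal_mask(4):
        print(row)
```

Running it prints five pairs, from `the -> cat` to `the cat sat on the -> mat`, followed by a lower-triangular 4 x 4 mask. Inside a transformer, the mask is applied to the attention scores. This lets all positions be trained in parallel while still never "seeing" the word they must predict.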
