DeepMind AI Supercharges YouTube Shorts Exposure by Auto-Generating Descriptions for Millions of Videos

Marktechpost

AUGUST 1, 2023

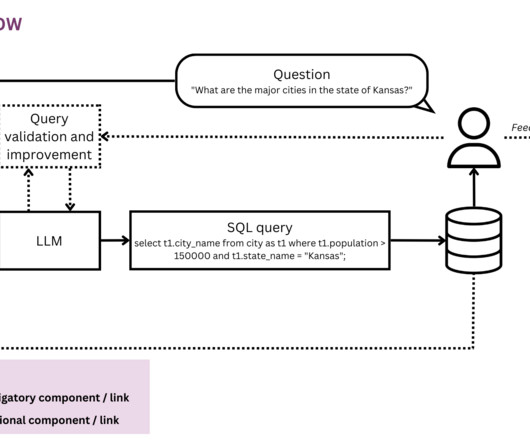

The generated descriptions are stored as metadata, which makes video classification more efficient and makes the videos easier for search engines to find. Separately, DeepMind and YouTube teamed up in 2018 to educate video creators on maximizing revenue by aligning advertisements with YouTube’s policies.
