A Gentle Introduction to GPTs

Mlearning.ai

FEBRUARY 19, 2023

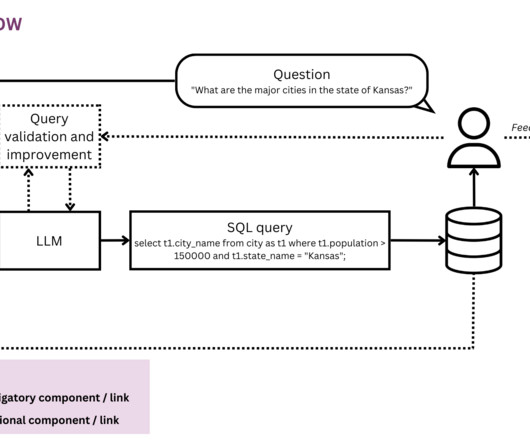

Along with text generation, GPT can also be used for text classification and text summarization. The auto-complete feature on your smartphone works on the same basic principle: predicting the next word from the words that came before it. When you type “how”, auto-complete suggests words like “to” or “are”. GPT models apply this next-word prediction at a far larger scale, and that scale is what chiefly sets GPT-3 apart from its predecessors.
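To make the auto-complete idea concrete, here is a minimal sketch in Python. It is a toy bigram counter, not a GPT: it assumes a tiny hand-written corpus and simply counts which word most often follows another. The function names `build_bigram_model` and `suggest` are hypothetical, chosen for illustration. A GPT replaces these raw counts with a neural network that looks at a much longer context, but the task it is trained on, predicting the next word, is the same.

```python
from collections import Counter, defaultdict


def build_bigram_model(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus (toy model)."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that immediately follows it.
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model


def suggest(model: dict[str, Counter], word: str, k: int = 2) -> list[str]:
    """Return up to k of the most frequent words seen after `word`."""
    followers = model.get(word.lower(), Counter())
    return [w for w, _ in followers.most_common(k)]


# Hypothetical example corpus, made up for illustration.
corpus = [
    "how to train a model",
    "how are you",
    "how to write code",
    "how are things",
    "how to cook rice",
]

model = build_bigram_model(corpus)
print(suggest(model, "how"))  # ['to', 'are']
```

Because “to” follows “how” three times and “are” only twice in this corpus, “to” is ranked first, which mirrors how an auto-complete keyboard orders its suggestions.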
