Understanding Transformers: A Deep Dive into NLP’s Core Technology

Analytics Vidhya

APRIL 16, 2024

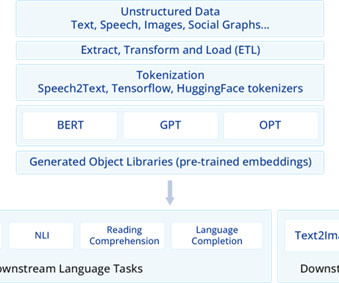

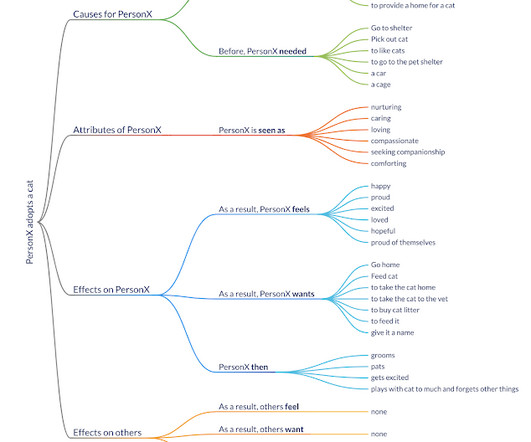

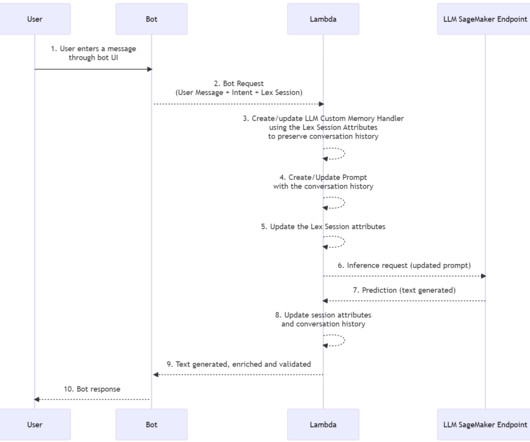

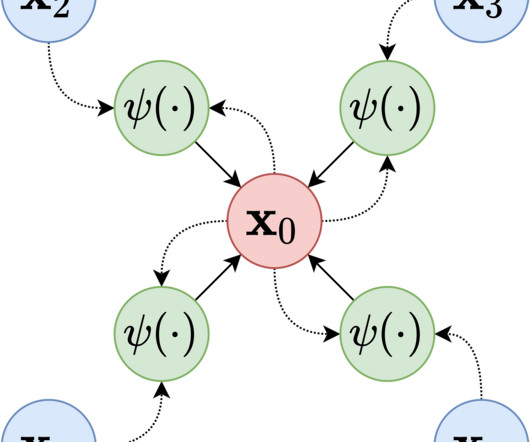

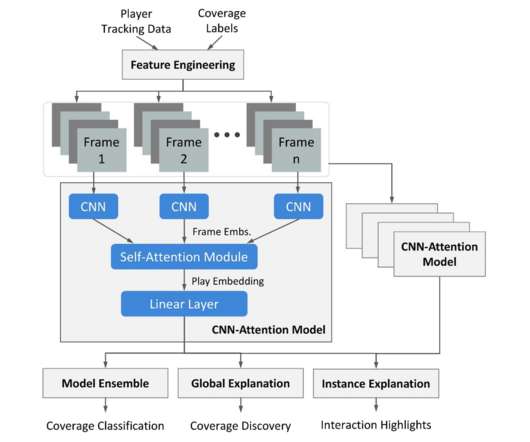

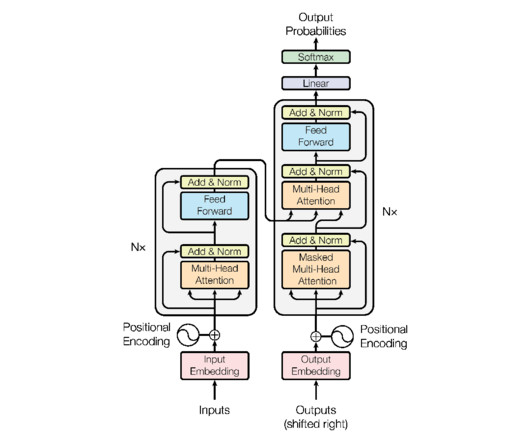

Introduction

Welcome to the world of Transformers, the deep learning architecture that has transformed Natural Language Processing (NLP) since its debut in 2017.
