NLP Rise with Transformer Models | A Comprehensive Analysis of T5, BERT, and GPT

Unite.AI

NOVEMBER 8, 2023

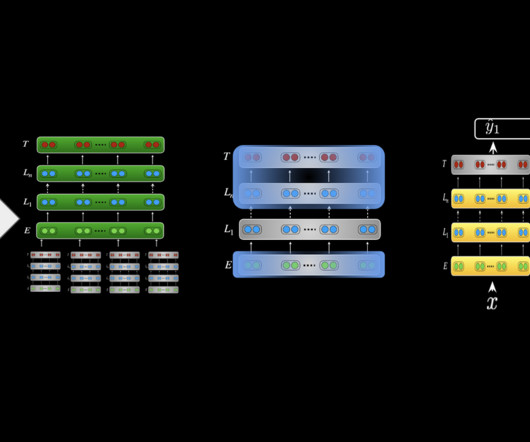

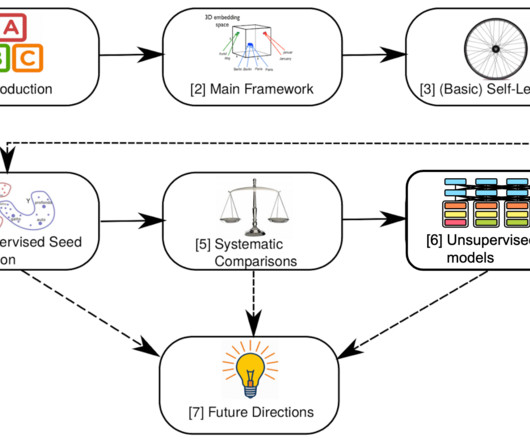

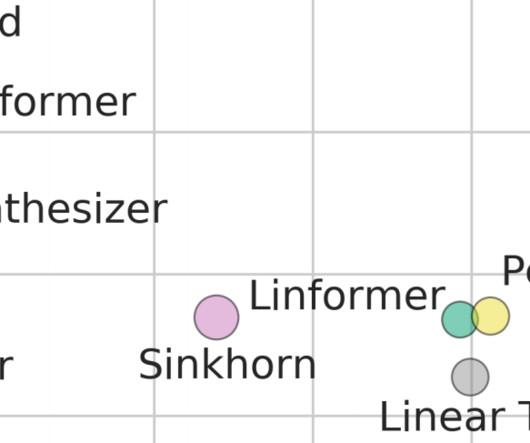

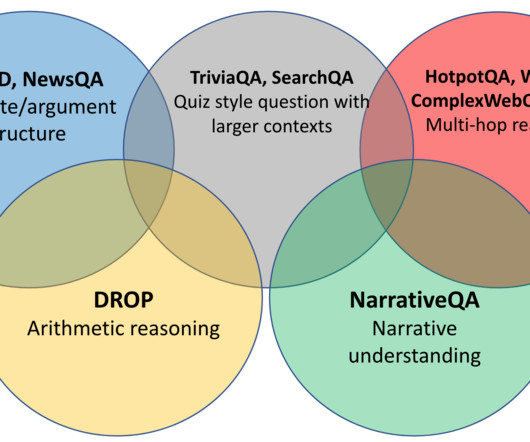

Natural Language Processing (NLP) has experienced some of the most impactful breakthroughs in recent years, primarily due to the the transformer architecture. BERT T5 (Text-to-Text Transfer Transformer) : Introduced by Google in 2020 , T5 reframes all NLP tasks as a text-to-text problem, using a unified text-based format.

Let's personalize your content