NLP Rise with Transformer Models | A Comprehensive Analysis of T5, BERT, and GPT

Unite.AI

NOVEMBER 8, 2023

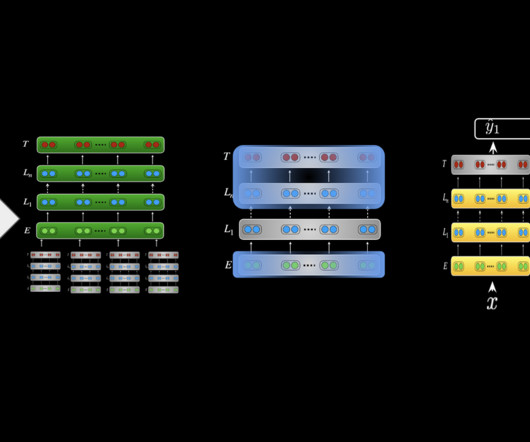

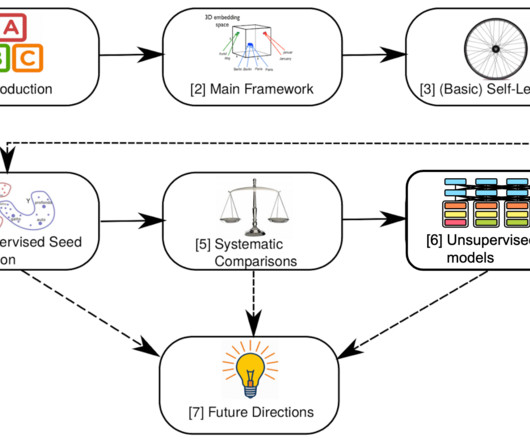

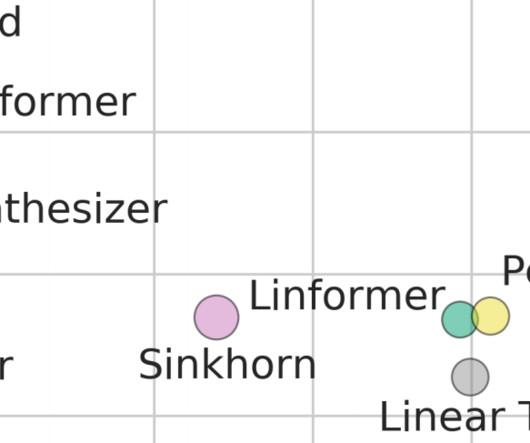

Developed by a team at Google led by Tomas Mikolov in 2013, Word2Vec represented words in a dense vector space, capturing syntactic and semantic word relationships based on their context within large corpora of text.

GPT Architecture

Here's a more in-depth comparison of the T5, BERT, and GPT models across various dimensions:

1.
