The Evolution of ImageNet and Its Applications

Viso.ai

FEBRUARY 11, 2024

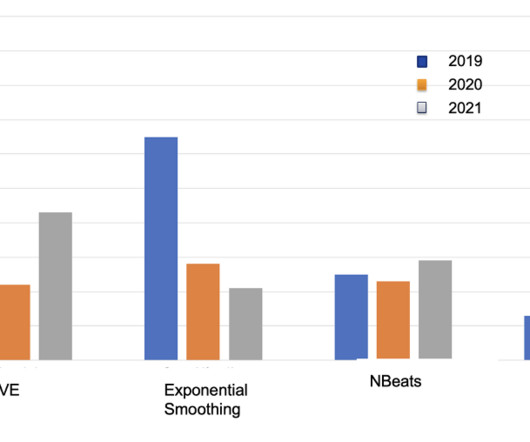

Image classification is a computer vision technique used to identify and categorize the main content (objects) in a photo or video.

- 2011: A good ILSVRC image classification (top-5) error rate is 25%.
- 2012: A deep convolutional neural network called AlexNet achieves a 16% error rate.

parameters, achieving an accuracy of around 84%.
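The ILSVRC error rates quoted above are top-5 error rates. A prediction counts as correct if the true label appears among the model's five highest-scoring classes. The following is a minimal sketch of that metric in plain Python, assuming each image comes with one score per class. The function name `top_k_error` and the toy scores are hypothetical illustrations, not part of any ImageNet toolkit.

```python
from typing import Sequence


def top_k_error(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 5) -> float:
    """Return the fraction of images whose true label is NOT among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("need at least one example")
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score; keep the first k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Hypothetical scores for 4 images over 6 classes.
scores = [
    [0.60, 0.20, 0.10, 0.05, 0.03, 0.02],  # true class 0 ranks 1st
    [0.02, 0.60, 0.20, 0.10, 0.05, 0.03],  # true class 0 ranks last (6th)
    [0.10, 0.50, 0.20, 0.05, 0.03, 0.12],  # true class 2 ranks 2nd
    [0.05, 0.03, 0.02, 0.70, 0.11, 0.09],  # true class 3 ranks 1st
]
labels = [0, 0, 2, 3]

print(top_k_error(scores, labels, k=5))  # 0.25
print(top_k_error(scores, labels, k=1))  # 0.5
```

Setting `k=1` gives the stricter top-1 error, which is what the "accuracy" figures for later models usually refer to (accuracy = 1 − top-1 error). The same toy data yields 25% top-5 error but 50% top-1 error, which is why the two metrics should not be compared directly.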
