Unlock ML insights using the Amazon SageMaker Feature Store Feature Processor

AWS Machine Learning Blog

SEPTEMBER 19, 2023

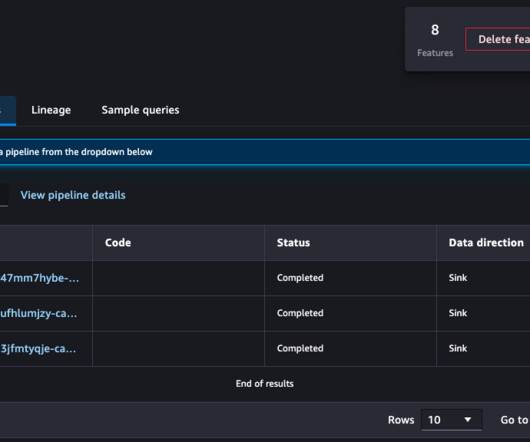

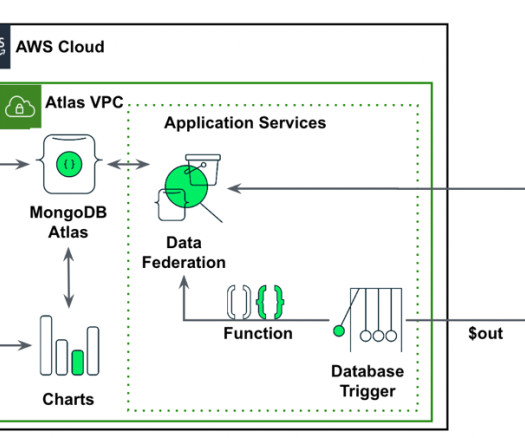

Amazon SageMaker Feature Store provides an end-to-end solution to automate feature engineering for machine learning (ML). For many ML use cases, raw data like log files, sensor readings, or transaction records need to be transformed into meaningful features that are optimized for model training. SageMaker Studio set up.

Let's personalize your content