Top Artificial Intelligence (AI) Courses from Google

Marktechpost

MAY 30, 2024

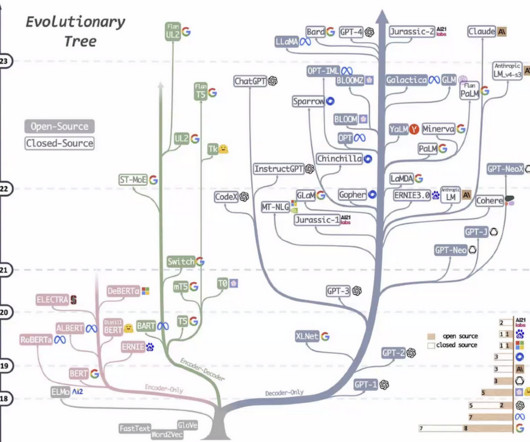

Introduction to AI and Machine Learning on Google Cloud: This course introduces Google Cloud’s AI and ML offerings for predictive and generative projects, covering the technologies, products, and tools used across the data-to-AI lifecycle. It also covers how to develop NLP projects using neural networks with Vertex AI and TensorFlow.
