Prompt Engineering for Game Development

Analytics Vidhya

June 24, 2024

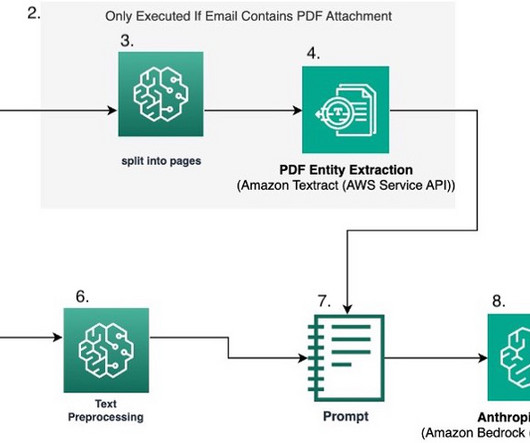

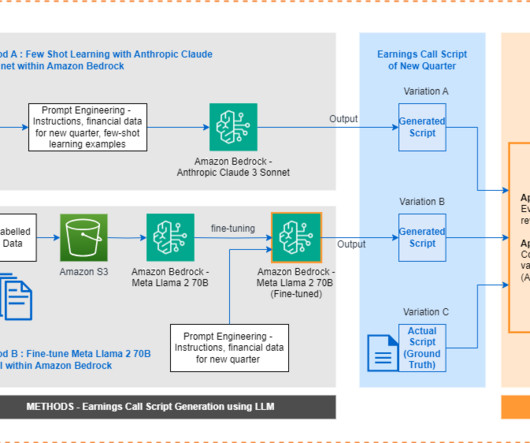

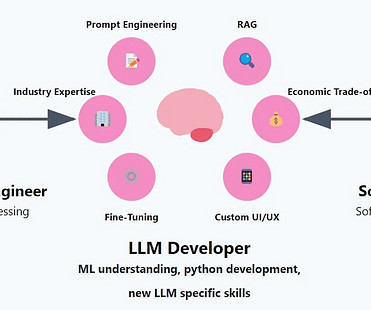

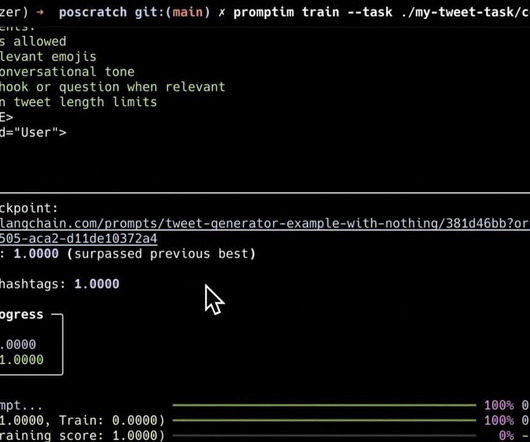

Introduction

The gaming industry is changing quickly, and integrating AI with creative design has given rise to prompt engineering. Prompt engineering is more than simply directing an AI; it’s […]

The post Prompt Engineering for Game Development appeared first on Analytics Vidhya.
