Autoencoder in Computer Vision – Complete 2023 Guide

Viso.ai

MARCH 7, 2023

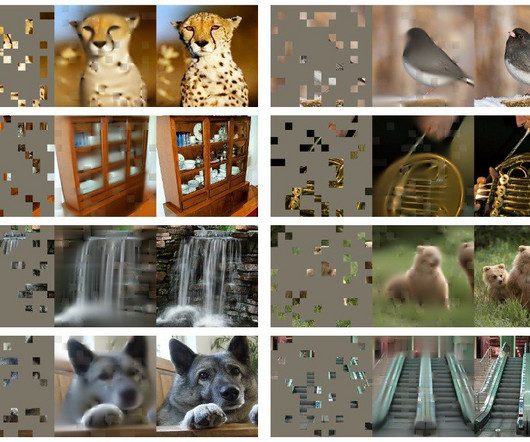

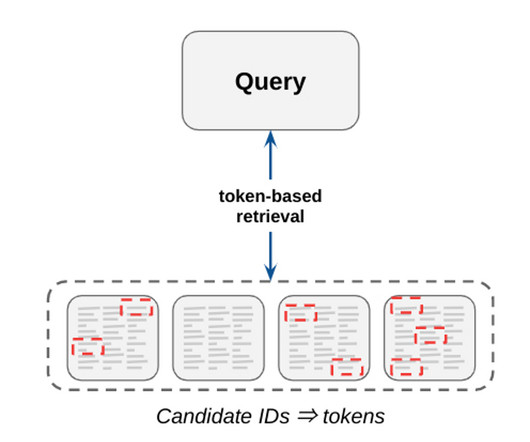

Autoencoders are neural networks used in machine learning for feature extraction, data compression, and image reconstruction.

Explanation and Definition of Autoencoders

Autoencoders are neural networks that learn to compress input data, such as images, into a typically smaller hidden layer of neurons and then reconstruct the input from that compressed representation.
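To make the compress-then-reconstruct idea concrete, here is a minimal sketch in plain Python. It is not taken from the article: it assumes a purely linear autoencoder (no activation functions), 4-dimensional toy inputs in place of images, a 2-neuron hidden layer, and per-sample gradient descent on squared reconstruction error. All names (`make_data`, `train_autoencoder`, `reconstruction_error`) are hypothetical and chosen only for illustration.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def make_data(count=20, seed=1):
    """Toy 4-D inputs of the form [a, b, a, b]; they lie on a 2-D subspace,
    so a 2-neuron hidden layer can in principle represent them."""
    rng = random.Random(seed)
    data = []
    for _ in range(count):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append([a, b, a, b])
    return data


def train_autoencoder(data, hidden=2, epochs=300, lr=0.02, seed=0):
    """Train encoder and decoder weights (assumed linear, no biases)."""
    rng = random.Random(seed)
    n = len(data[0])
    enc = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(hidden)]
    dec = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(n)]
    for _ in range(epochs):
        for x in data:
            h = matvec(enc, x)   # compress: input -> hidden code
            y = matvec(dec, h)   # reconstruct: hidden code -> output
            # Gradient of 0.5 * ||y - x||^2 with respect to y.
            err = [yi - xi for yi, xi in zip(y, x)]
            # Backpropagate the error to the hidden layer (dec^T @ err),
            # computed before the decoder weights are updated.
            grad_h = [sum(dec[i][j] * err[i] for i in range(n))
                      for j in range(hidden)]
            for i in range(n):
                for j in range(hidden):
                    dec[i][j] -= lr * err[i] * h[j]
            for j in range(hidden):
                for k in range(n):
                    enc[j][k] -= lr * grad_h[j] * x[k]
    return enc, dec


def reconstruction_error(data, enc, dec):
    """Mean squared reconstruction error per sample."""
    total = 0.0
    for x in data:
        y = matvec(dec, matvec(enc, x))
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / len(data)


if __name__ == "__main__":
    data = make_data()
    untrained = train_autoencoder(data, epochs=0)  # initial random weights
    trained = train_autoencoder(data)
    print("error before training:", reconstruction_error(data, *untrained))
    print("error after training: ", reconstruction_error(data, *trained))
```

Practical autoencoders for images usually add nonlinear activations and convolutional layers and are built with a deep learning framework; the sketch only illustrates the encoder, hidden code, and decoder roles described above.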
