How Large Language Models Are Unveiling the Mystery of ‘Blackbox’ AI

Unite.AI

DECEMBER 19, 2024

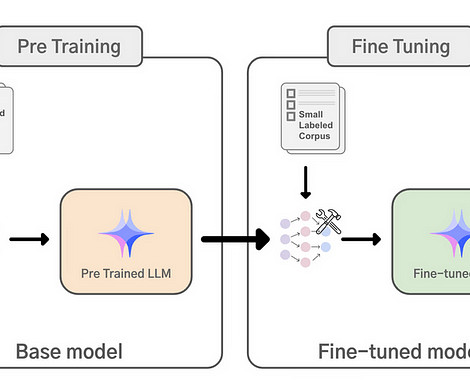

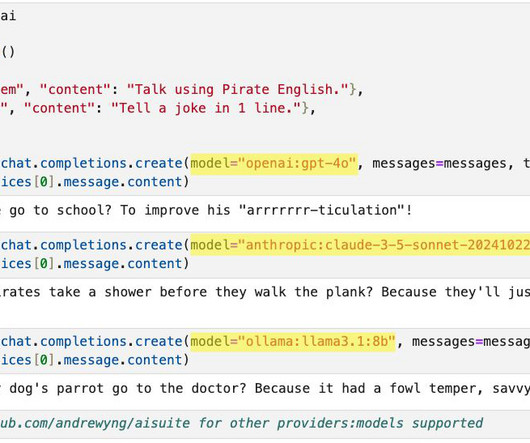

Large Language Models (LLMs) are changing how we interact with AI. They help bridge the gap between complicated machine-learning models and the people who need to understand them.

Conclusion

Large Language Models are making AI more explainable and accessible to everyone.
