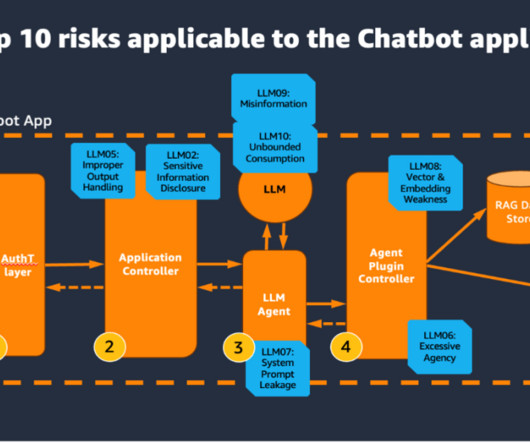

Secure a generative AI assistant with OWASP Top 10 mitigation

JANUARY 24, 2025

Contrast that with Scope 4/5 applications, where not only do you build and secure the generative AI application yourself, but you are also responsible for fine-tuning and training the underlying large language model (LLM).

LLM and LLM agent

The LLM provides the core generative AI capability to the assistant.
