5 Steps to Implement AI-Powered Threat Detection in Your Business

Aiiot Talk

APRIL 23, 2024

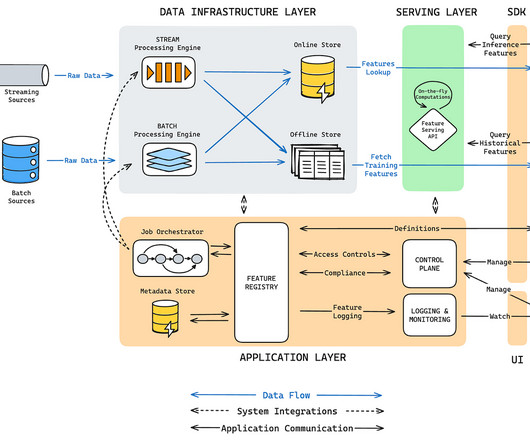

Fortunately, AI is transforming threat detection. With AI in your corner, you can significantly bolster your defense and make your digital environment more secure.
