Narrowing the confidence gap for wider AI adoption

AI News

DECEMBER 9, 2024

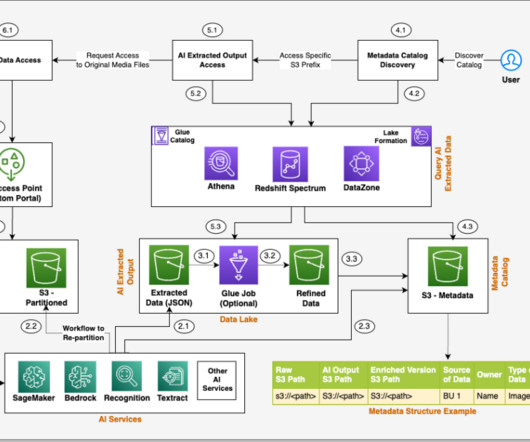

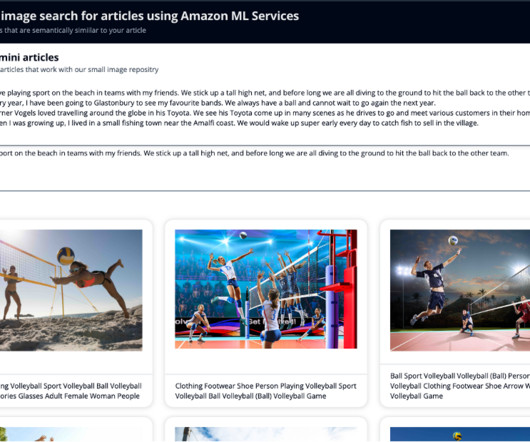

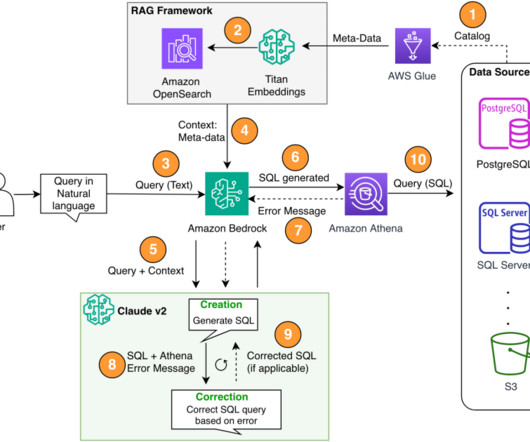

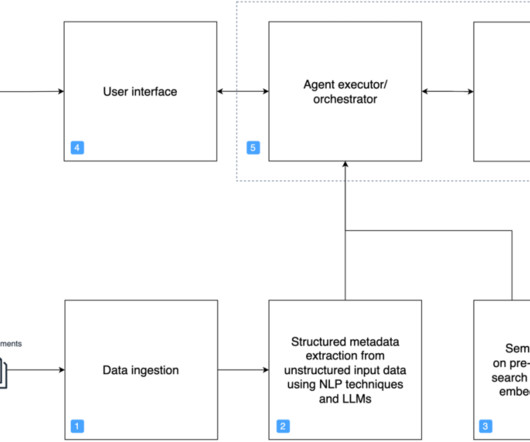

Avi Perez, CTO of Pyramid Analytics, explained that his business intelligence software’s AI infrastructure was deliberately built to keep data away from the LLM. It shares only metadata that describes the problem, and it interfaces with the LLM as the best way for locally hosted engines to run the analysis. “There’s
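The metadata-only pattern described above can be illustrated with a minimal sketch. This is not Pyramid Analytics' implementation; every name here (`describe_schema`, `build_prompt`, `fake_llm`, `answer`, and the `sales` table) is a hypothetical stand-in, and the LLM call is replaced by a local stub. The assumption is simply that the model sees column names and types, returns a query, and a locally hosted engine (here, in-memory SQLite) runs it against the actual data.

```python
import sqlite3


def describe_schema(conn, table):
    """Collect column names and declared types only; no row values are read."""
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    # Each row is (cid, name, type, notnull, dflt_value, pk).
    return {"table": table,
            "columns": [{"name": r[1], "type": r[2]} for r in rows]}


def build_prompt(question, metadata):
    """Build the text sent to the model from the question and metadata alone."""
    cols = ", ".join(f"{c['name']} ({c['type']})" for c in metadata["columns"])
    return (f"Table {metadata['table']} has columns: {cols}.\n"
            f"Question: {question}\n"
            "Reply with one SQL query.")


def fake_llm(prompt):
    """Hypothetical stand-in for a remote LLM call; returns a fixed query."""
    return ("SELECT region, SUM(amount) AS total "
            "FROM sales GROUP BY region ORDER BY region")


def answer(conn, question, table, llm=fake_llm):
    """Send metadata to the model, then run the returned query locally."""
    prompt = build_prompt(question, describe_schema(conn, table))
    sql = llm(prompt)
    return prompt, conn.execute(sql).fetchall()


# Illustrative data that stays in the local engine.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("EMEA", 120.0), ("APAC", 80.0), ("EMEA", 30.0)])

prompt, result = answer(conn, "What is total revenue by region?", "sales")
print(prompt)
print(result)
```

In this sketch the prompt contains the table and column names but none of the stored values, while the aggregation itself happens in the local database. A real deployment would also need to validate the returned query before executing it.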
