easy-explain: Explainable AI for YoloV8

Towards AI

FEBRUARY 3, 2024

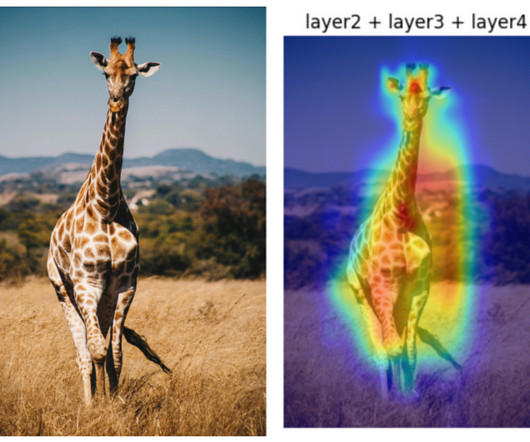

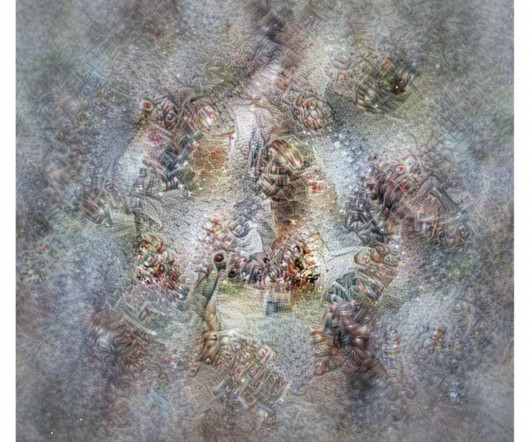

(Left) Photo by Pawel Czerwinski on Unsplash | (Right) Unsplash image adjusted by the showcased algorithm

Introduction

It’s been a while since I created the ‘easy-explain’ package and published it on PyPI. A few weeks ago, I needed an explainability algorithm for a YoloV8 model. The truth is, I couldn’t find anything.
