Building the Same App across Various Web Frameworks

Eugene Yan

SEPTEMBER 7, 2024

Comparing five implementations built with FastAPI, FastHTML, Next.

Unite.AI

SEPTEMBER 7, 2024

A recent study from the University of California, Merced, has shed light on a concerning trend: our tendency to place excessive trust in AI systems, even in life-or-death situations. As AI continues to permeate various aspects of our society, from smartphone assistants to complex decision-support systems, we find ourselves increasingly relying on these technologies to guide our choices.

Marktechpost

SEPTEMBER 7, 2024

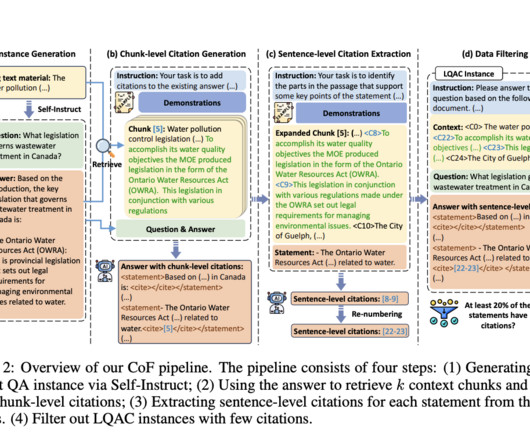

Hallucination is a phenomenon where large language models (LLMs) produce responses that are not grounded in reality or do not align with the provided context, generating incorrect, misleading, or nonsensical information. These errors can have serious consequences, particularly in applications that require high precision, like medical diagnosis, legal advice, or other high-stakes scenarios.

Marktechpost

SEPTEMBER 7, 2024

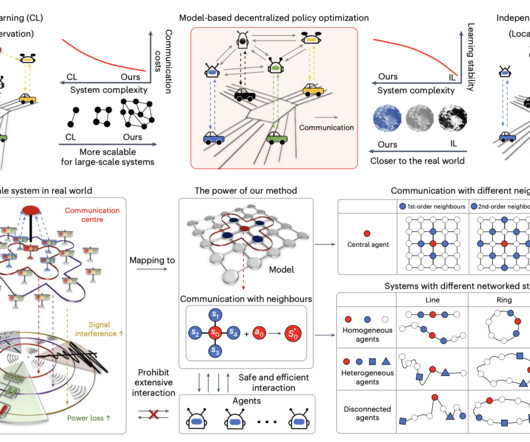

The primary challenge in scaling AI systems is making decisions efficiently while maintaining performance. Distributed AI, particularly multi-agent reinforcement learning (MARL), is promising because it decomposes complex tasks and distributes them across collaborating nodes. However, real-world applications are limited by high communication and data requirements.

Marktechpost

SEPTEMBER 7, 2024

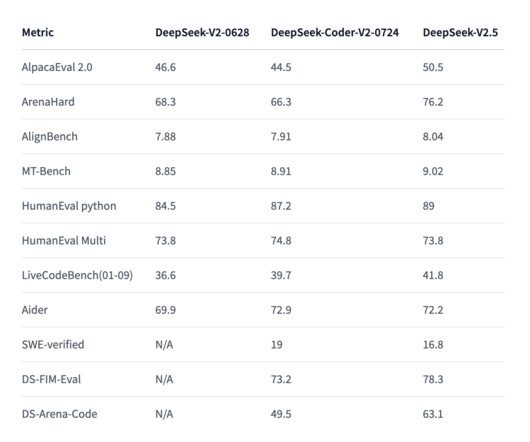

DeepSeek-AI has released DeepSeek-V2.5, a powerful Mixture-of-Experts (MoE) model with 236 billion total parameters, of which about 21 billion are active per token, routed across 160 experts. The model excels at chat and coding tasks, with cutting-edge capabilities such as function calling, JSON output generation, and Fill-in-the-Middle (FIM) completion.

Marktechpost

SEPTEMBER 7, 2024

Large language models (LLMs) have become fundamental tools for tasks such as question answering (QA) and text summarization. These models can process long, complex texts, with context windows exceeding 100,000 tokens. As LLMs are increasingly used for long-context tasks, ensuring their reliability and accuracy becomes more pressing, because users rely on them to sift through vast amounts of information and return concise, correct answers.

Marktechpost

SEPTEMBER 7, 2024

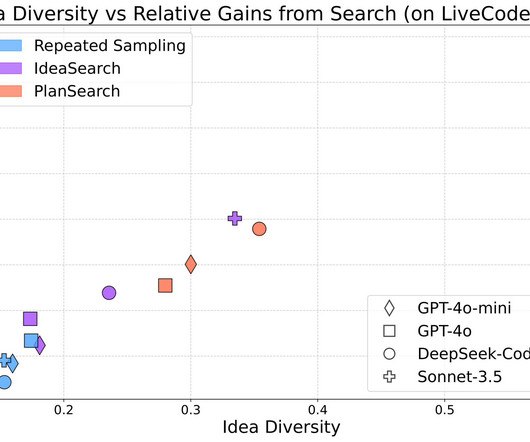

Large language models (LLMs) have made significant progress in domains including natural language understanding and code generation; they can generate coherent text and solve complex tasks. However, they still struggle in more specialized settings such as competitive programming. Work in this area focuses on improving the models’ ability to generate diverse, accurate solutions to coding problems while using compute more effectively during inference.

Marktechpost

SEPTEMBER 7, 2024

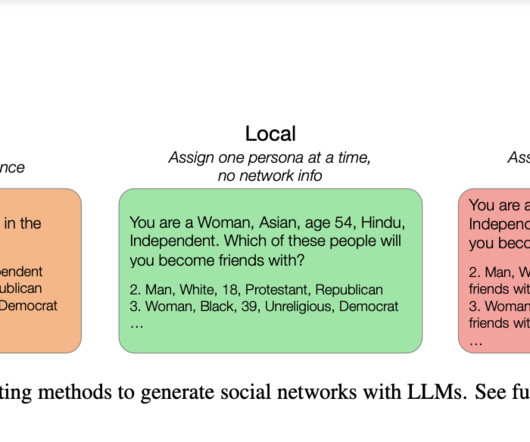

Social network generation has applications in many fields, such as epidemic modeling, social media simulation, and the study of social phenomena like polarization. Realistic synthetic networks are crucial when real networks cannot be observed directly because of privacy concerns or other constraints, since they are needed to model interactions and predict outcomes accurately.

Marktechpost

SEPTEMBER 7, 2024

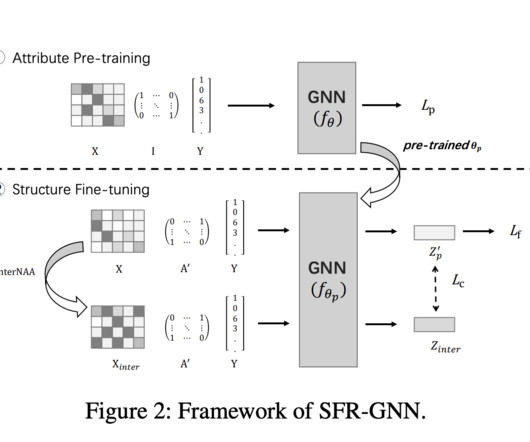

Graph neural networks (GNNs) have emerged as powerful tools for capturing complex interactions between real-world entities, with applications across many business domains. They excel at generating effective embeddings for graph entities by encoding both node features and structural information, making them valuable for numerous downstream tasks.
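The following minimal sketch shows how a GNN combines a node's own features with information from its graph neighborhood. It performs one message-passing step in the style of GraphSAGE's mean aggregator. Several assumptions are worth stating. The function name `mean_aggregate_layer` and the dictionary-based graph format are hypothetical. The learned weight matrix and nonlinearity that a real GNN layer applies are omitted, so only the aggregation itself is shown.

```python
from typing import Dict, List

Vector = List[float]

def mean_aggregate_layer(
    features: Dict[str, Vector],
    neighbors: Dict[str, List[str]],
) -> Dict[str, Vector]:
    """Hypothetical single message-passing step (no learned weights).

    Each node's output concatenates its own features with the mean of its
    neighbors' features, so the result reflects both attributes and structure.
    """
    out: Dict[str, Vector] = {}
    for node, own in features.items():
        nbrs = neighbors.get(node, [])
        dim = len(own)
        if nbrs:
            # Element-wise mean over neighbor feature vectors
            agg = [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)]
        else:
            agg = [0.0] * dim  # isolated node: no structural signal to add
        out[node] = own + agg  # list concatenation: [own features | neighborhood summary]
    return out
```

Stacking several such steps lets information flow across multi-hop neighborhoods. In a trained model, a learned projection after the concatenation turns this into the embedding used by downstream tasks.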

Marktechpost

SEPTEMBER 7, 2024

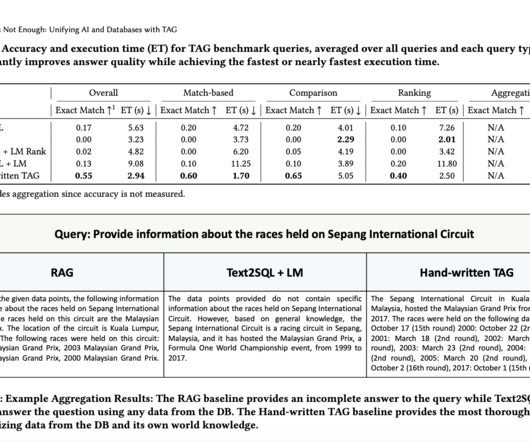

Artificial intelligence (AI) and database management systems have increasingly converged, with significant potential to improve how users interact with large datasets. Recent advancements aim to allow users to pose natural language questions directly to databases and retrieve detailed, complex answers. However, current tools are limited in addressing real-world demands.

Marktechpost

SEPTEMBER 7, 2024

The paper “MemLong: Memory-Augmented Retrieval for Long Text Modeling” addresses a critical limitation of large language models (LLMs): processing long contexts. While LLMs have shown remarkable success in many applications, they struggle with long-sequence tasks because the time and memory cost of traditional attention grows quadratically with sequence length.
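The quadratic cost comes from comparing every query position with every key position. The sketch below implements plain-Python scaled dot-product attention and builds the full n × n weight matrix explicitly so that growth is visible. The function names are illustrative and are not taken from the MemLong paper.

```python
import math
from typing import List

Matrix = List[List[float]]

def attention_weights(queries: Matrix, keys: Matrix) -> Matrix:
    """Scaled dot-product attention weights: one row per query, one column per key."""
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    weights: Matrix = []
    for q in queries:  # n queries ...
        # ... each scored against n keys, giving n * n scores in total
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])  # softmax over keys
    return weights

def score_count(n: int) -> int:
    """Number of query-key scores full self-attention computes for n tokens."""
    return n * n
```

Doubling the sequence length quadruples the number of scores. At 100,000 tokens, a single head in a single layer would need 10^10 scores. That is about 40 GB in 32-bit floats if the matrix were materialized. Memory-efficient attention kernels avoid storing the whole matrix at once, but the compute still scales quadratically.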

Marktechpost

SEPTEMBER 7, 2024

Graph neural networks (GNNs) have emerged as the leading approach for graph learning tasks across domains including recommender systems, social networks, and bioinformatics. However, GNNs are vulnerable to adversarial attacks, particularly structural attacks that modify graph edges. These attacks pose significant challenges in scenarios where attackers have limited access to entity relationships.

Marktechpost

SEPTEMBER 7, 2024

Mixture-of-experts (MoE) architectures are gaining importance in the rapidly developing field of artificial intelligence (AI) because they enable systems that are more effective, scalable, and adaptable. MoE optimizes compute and resource utilization by using a set of specialized sub-models, or experts, that are selectively activated based on the input data.
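Selective activation of experts is commonly implemented with a learned router that scores every expert and runs only the top-k. The following minimal sketch shows top-k gating in plain Python. Several choices are illustrative assumptions rather than any particular model's design: the name `moe_forward`, the linear router, the requirement that experts preserve the input dimension, and the default k = 2. Production MoE layers also add details such as load-balancing losses and expert capacity limits, which are omitted here.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def moe_forward(x: Vector, gate_weights: List[Vector],
                experts: List[Expert], k: int = 2) -> Vector:
    """Hypothetical top-k MoE layer: route x to k experts and mix their outputs."""
    # Router logits: one dot product per expert
    logits = [sum(wi * xi for wi, xi in zip(w, x)) for w in gate_weights]
    # Indices of the k highest-scoring experts for this input
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize gate probabilities over the selected experts only
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + p * yi for o, yi in zip(out, y)]
    return out
```

Only k experts run per input, so compute per token tracks the active experts rather than the total parameter count. This is the efficiency trade-off MoE models are built around.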
