Reflections on AI Engineer Summit 2023

Eugene Yan

OCTOBER 14, 2023

The biggest deployment challenges, backward compatibility, multi-modality, and SF work ethic.

Unite.AI

OCTOBER 14, 2023

As artificial intelligence becomes an integral part of our lives, AI voice generators such as WellSaid Labs have caught people's attention. With 50 realistic AI voices and precise speech-editing capabilities, WellSaid Labs has become a game-changer in the world of voiceovers. In this WellSaid Labs review, we look at what WellSaid Labs is and explore its various use cases.

Mlearning.ai

OCTOBER 14, 2023

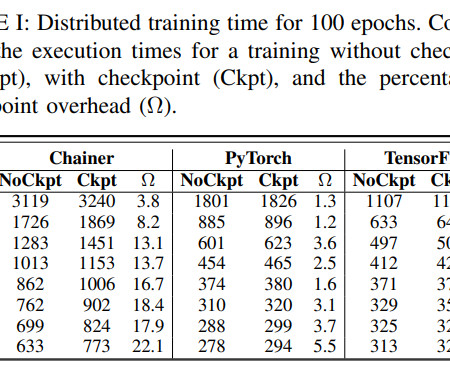

Introduction

Deep learning tasks usually demand substantial computation and memory, and their computations are embarrassingly parallel, which makes high-performance computing (HPC) systems a natural target for them. The paper claims that deep learning frameworks have made distributed training easier, but that fault tolerance has not received enough attention.
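This excerpt does not describe the paper's own fault-tolerance approach. As a general illustration only, the most common baseline is checkpoint/restart: periodically persist training state so that a failed job resumes from the last checkpoint instead of starting over. The sketch below is a toy, single-process version; the function names, the JSON state file, and the scalar `weight` standing in for model parameters are all hypothetical.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write state atomically: dump to a temp file, then rename over the target."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    # os.replace is atomic on POSIX when both paths are on the same filesystem,
    # so a crash mid-save never leaves a half-written checkpoint behind.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if no checkpoint exists."""
    if not os.path.exists(path):
        return {"step": 0, "weight": 0.0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, checkpoint_every=10, fail_at=None):
    """Toy training loop (hypothetical); 'weight' stands in for real model parameters."""
    state = load_checkpoint(path)  # resume from the last checkpoint, if any
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError(f"simulated node failure at step {state['step']}")
        state["weight"] += 0.5  # stand-in for one optimizer update
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)  # final checkpoint at completion
    return state
```

For example, a run that crashes at step 25 (`fail_at=25`) leaves the step-20 checkpoint on disk; calling `train` again without `fail_at` resumes from step 20, so only five steps are recomputed. The checkpoint interval trades checkpointing overhead against the work lost per failure.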

Speakers: Carolyn Clark and Miriam Connaughton

Forget predictions; let’s focus on priorities for the year and explore how to supercharge your employee experience. Join Miriam Connaughton and Carolyn Clark as they discuss key HR trends for 2025 and how to turn them into actionable strategies for your organization. In this dynamic webinar, our speakers will share expert insights and practical tips to help your employee experience adapt and thrive.

ODSC - Open Data Science

OCTOBER 14, 2023

In the spirit of open data and growing the data science and AI community, we are thrilled to announce that we are now offering a free ODSC Open Pass for both in-person and virtual attendance at ODSC West, October 30 to November 2. If you haven’t attended an ODSC conference before, this is the perfect way to dip your toes in and get a feel for what we are all about.

Bugra Akyildiz

OCTOBER 14, 2023

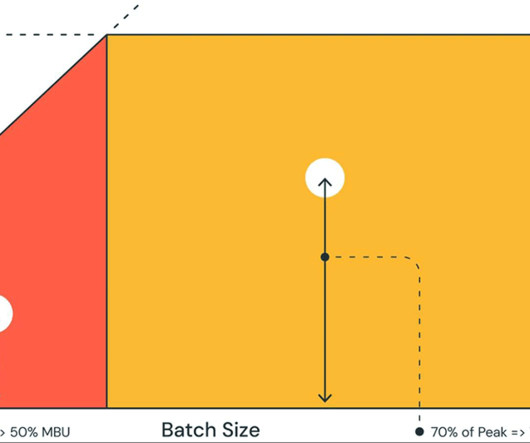

Articles

Databricks wrote a long article on optimizing LLM inference for higher efficiency and lower latency. Two of its key points are that memory bandwidth is the main constraint on LLM inference speed and that batching is critical for achieving high throughput. Among the challenges the post covers in detail: Memory bandwidth: LLMs are very large models, and they can require a lot of memory to run.
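The two claims above can be made concrete with back-of-envelope arithmetic. Under a simplified model (an assumption for illustration, not the Databricks article's own analysis), each decode step must stream every weight from GPU memory once, so memory bandwidth sets a floor on per-step latency; batching lets that single pass over the weights produce one new token for every sequence in the batch. The model size, precision, and bandwidth below are hypothetical round numbers, and the sketch ignores KV-cache traffic, compute limits, and memory capacity.

```python
def decode_step_seconds(n_params, bytes_per_param, bandwidth_bytes_per_s):
    """Lower bound on one decode step if every weight is read from memory once."""
    return n_params * bytes_per_param / bandwidth_bytes_per_s


def tokens_per_second(batch_size, step_seconds):
    """With batching, one pass over the weights yields one token per sequence."""
    return batch_size / step_seconds


# Illustrative assumptions, not figures from the Databricks article:
N_PARAMS = 7e9          # a 7B-parameter model
BYTES_PER_PARAM = 2     # 16-bit weights
BANDWIDTH = 2e12        # 2 TB/s of memory bandwidth

step = decode_step_seconds(N_PARAMS, BYTES_PER_PARAM, BANDWIDTH)
for batch in (1, 8, 32):
    rate = tokens_per_second(batch, step)
    print(f"batch={batch:>2}: {step * 1e3:.1f} ms/step, ~{rate:,.0f} tokens/s")
```

With these numbers, reading 14 GB of weights takes about 7 ms per step, so a single sequence gets roughly 143 tokens/s while a batch of 32 gets about 32 times that from the same memory traffic. In practice the gain flattens once the workload becomes compute-bound or the KV cache fills GPU memory.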