Mora: An Open Source Alternative to Sora

Analytics Vidhya

MARCH 28, 2024

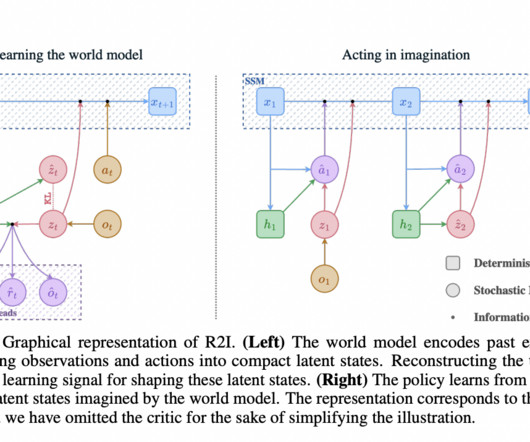

Introduction

Generative AI, in its essence, is like a wizard’s cauldron, brewing up images, text, and now videos from a set of ingredients known as data. The magic lies in its ability to learn from this data and generate new, previously unseen content strikingly similar to the real thing. Image generation models like DALL-E have […]

The post Mora: An Open Source Alternative to Sora appeared first on Analytics Vidhya.
