Effective Prompting with A Handful of Fundamentals

Eugene Yan

MAY 25, 2024

Unite.AI

MAY 25, 2024

The hospitality industry has grappled with a severe labor shortage since the COVID-19 pandemic. As businesses struggle to find enough workers to meet the growing demand, many have turned to robotic technology as a potential solution. However, a recent study conducted by Washington State University suggests that the introduction of robots in the workplace may inadvertently exacerbate the labor shortage due to a phenomenon known as “robot-phobia” among hospitality workers.

Marktechpost

MAY 25, 2024

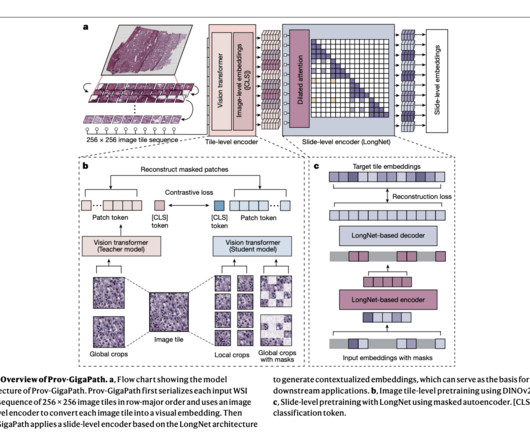

Digital pathology converts traditional glass slides into digital images for viewing, analysis, and storage. Advances in imaging technology and software drive this transformation, which has significant implications for medical diagnostics, research, and education. The current generative AI revolution, together with the parallel digital transformation in biomedicine, offers a chance to accelerate progress in precision health by a factor of ten.

Artificial Lawyer

MAY 25, 2024

Following a request for comment on the controversial study ‘Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools’, conducted by the Human-Centred AI group.

Marktechpost

MAY 25, 2024

GPT-4 and other Large Language Models (LLMs) have proven to be highly proficient in text analysis, interpretation, and generation. Their exceptional effectiveness extends to a wide range of financial sector tasks, including sophisticated disclosure summarization, sentiment analysis, information extraction, report production, and compliance verification.

Marktechpost

MAY 25, 2024

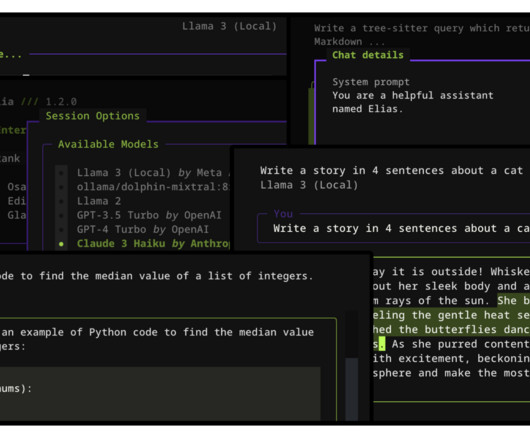

People who work with large language models often need a quick and efficient way to interact with these powerful tools. However, many existing methods require switching between applications or dealing with slow, cumbersome interfaces. Some solutions are available, but they come with their own set of limitations. Web-based interfaces are common but can be slow and may not support all the models users need.

Ofemwire

MAY 25, 2024

Logging out of Gemini AI is just a three-step process. Within a few seconds, you’re done. However, most users don’t know what logging out of Gemini AI actually means. You won’t be among those users because you’ll know what it means. In this article, you’ll learn how to log out of Gemini AI, what doing that means, and more.

Marktechpost

MAY 25, 2024

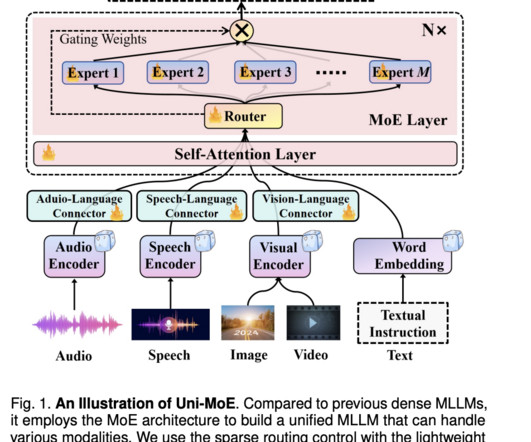

Unlocking the potential of large multimodal language models (MLLMs) to handle diverse modalities like speech, text, image, and video is a crucial step in AI development. This capability is essential for applications such as natural language understanding, content recommendation, and multimodal information retrieval, enhancing the accuracy and robustness of AI systems.

Marktechpost

MAY 25, 2024

Large Language Models (LLMs) have driven remarkable advancements across various Natural Language Processing (NLP) tasks. These models excel in understanding and generating human-like text, playing a pivotal role in applications such as machine translation, summarization, and more complex reasoning tasks. The progression in this field continues to transform how machines comprehend and process language, opening new avenues for research and development.

Marktechpost

MAY 25, 2024

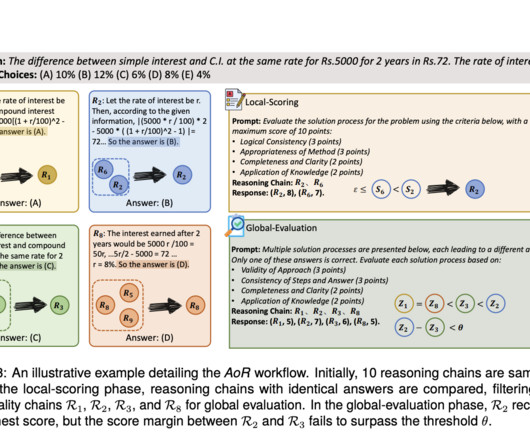

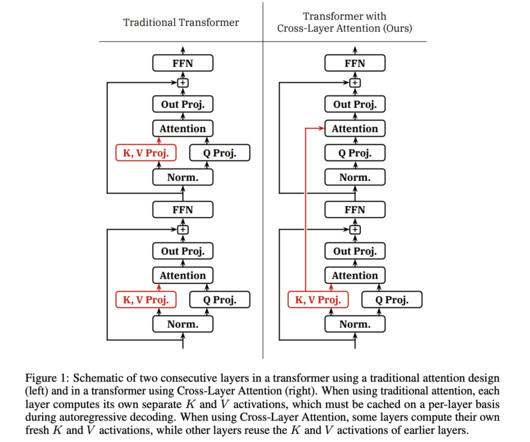

The memory footprint of the key-value (KV) cache can be a bottleneck when serving large language models (LLMs), as it scales proportionally with both sequence length and batch size. This overhead limits batch sizes for long sequences and necessitates costly techniques like offloading when on-device memory is scarce. Furthermore, the ability to persistently store and retrieve KV caches over extended periods is desirable to avoid redundant computations.
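The scaling described above can be made concrete with a back-of-the-envelope estimate. The sketch below assumes a standard transformer that stores one key and one value vector per layer, per KV head, per token; the function name and the model shape (32 layers, 32 KV heads, head dimension 128, 16-bit values) are hypothetical and chosen only for illustration.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim,
                   seq_len, batch_size, bytes_per_elem=2):
    """Rough KV cache size in bytes.

    The leading factor of 2 counts the key and the value tensor.
    The result grows linearly in both seq_len and batch_size.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Hypothetical model shape, 16-bit values (2 bytes per element).
one_seq = kv_cache_bytes(32, 32, 128, seq_len=4096, batch_size=1)
print(one_seq / 1024**3)  # 2.0 (GiB for a single 4096-token sequence)

# Doubling the sequence length and using a batch of 4 costs 8x as much.
bigger = kv_cache_bytes(32, 32, 128, seq_len=8192, batch_size=4)
print(bigger / one_seq)  # 8.0
```

Under these assumptions, a single 4096-token sequence already takes about 2 GiB, which is why long sequences limit the batch size that fits in on-device memory.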

Marktechpost

MAY 25, 2024

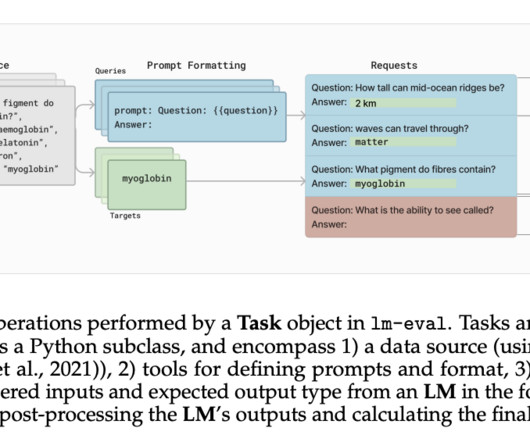

Language models are fundamental to natural language processing (NLP), focusing on generating and comprehending human language. These models are integral to applications such as machine translation, text summarization, and conversational agents, where the aim is to develop technology capable of understanding and producing human-like text. Despite their significance, the effective evaluation of these models remains an open challenge within the NLP community.

Marktechpost

MAY 25, 2024

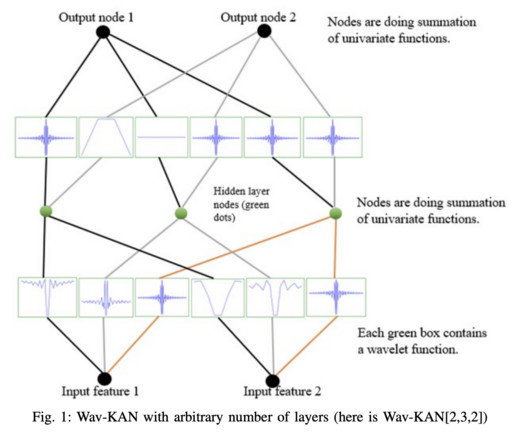

Advances in AI have produced capable systems whose decisions are hard to interpret, raising concerns about deploying untrustworthy AI in daily life and the economy. Understanding neural networks is vital for building trust, for addressing ethical concerns such as algorithmic bias, and for scientific applications that require model validation. Multilayer perceptrons (MLPs) are widely used but are less interpretable than attention layers.

Marktechpost

MAY 25, 2024

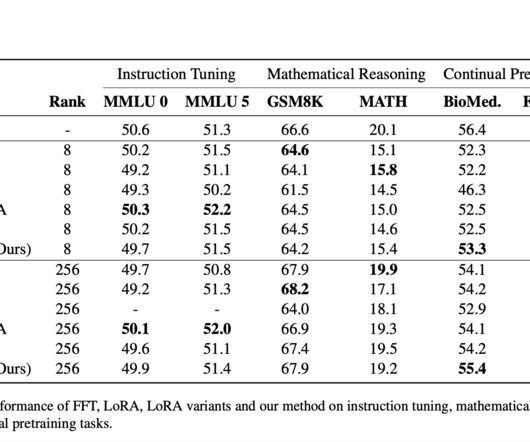

Parameter-efficient fine-tuning (PEFT) techniques adapt large language models (LLMs) to specific tasks by modifying a small subset of parameters, unlike Full Fine-Tuning (FFT), which updates all parameters. PEFT, exemplified by Low-Rank Adaptation (LoRA), significantly reduces memory requirements by updating less than 1% of parameters while achieving similar performance to FFT.
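The "less than 1%" figure is easy to sanity-check for a single weight matrix. The sketch below assumes the usual LoRA formulation, in which a frozen weight of shape `d_out x d_in` is adapted by two trainable low-rank factors of shapes `d_out x r` and `r x d_in`; the function name, the 4096 x 4096 layer size, and the rank of 8 are hypothetical values for illustration.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters added by LoRA for one weight matrix.

    The two low-rank factors have shapes (d_out, rank) and (rank, d_in).
    """
    return rank * (d_in + d_out)


# Hypothetical layer: a 4096 x 4096 projection adapted with rank 8.
d_in = d_out = 4096
full = d_in * d_out                                # parameters updated by full fine-tuning
lora = lora_trainable_params(d_in, d_out, rank=8)  # parameters updated by LoRA
fraction = lora / full

print(f"{lora} of {full} parameters ({fraction:.2%})")
# 65536 of 16777216 parameters (0.39%)
```

The fraction for a whole model depends on which layers are adapted and on the chosen rank, so this covers one matrix only.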

Marktechpost

MAY 25, 2024

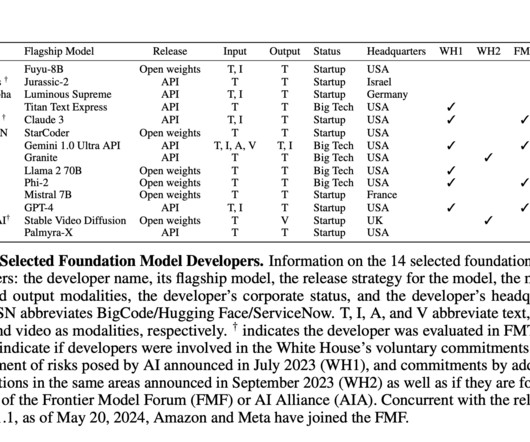

Foundation models are central to AI’s influence on the economy and society. Transparency is crucial for accountability, competition, and understanding, particularly regarding the data used in these models. Governments are enacting regulations like the EU AI Act and the US AI Foundation Model Transparency Act to enhance transparency. The Foundation Model Transparency Index (FMTI) introduced in 2023 evaluates transparency across 10 major developers (e.g.
