How to Interview and Hire ML/AI engineers

Eugene Yan

JULY 6, 2024

What to interview for, how to structure the phone screen, interview loop, and debrief, and a few tips.

Marktechpost

JULY 6, 2024

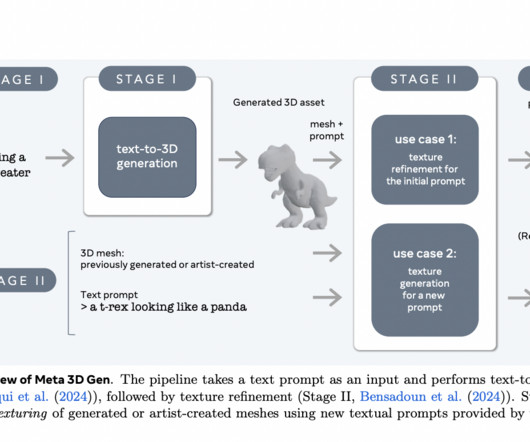

Text-to-3D generation is an innovative field that creates three-dimensional content from textual descriptions. This technology is crucial in various industries, such as video games, augmented reality (AR), and virtual reality (VR), where high-quality 3D assets are essential for creating immersive experiences. The challenge lies in generating realistic and detailed 3D models that meet artistic standards while ensuring computational efficiency.

Uber ML

JULY 6, 2024

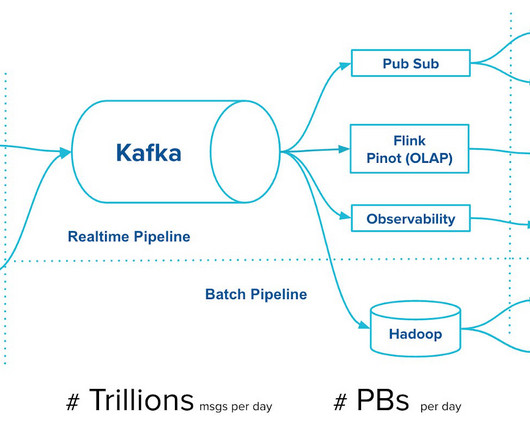

Kafka Tiered Storage, developed in collaboration with the Apache Kafka community, introduces the separation of storage and processing in brokers, significantly improving the scalability, reliability, and efficiency of Kafka clusters.

Marktechpost

JULY 6, 2024

Arcee AI has recently released its latest innovation, the Arcee Agent, a state-of-the-art 7 billion parameter language model. This model is designed for function calling and tool usage, providing developers, researchers, and businesses with an efficient and powerful AI solution. Despite its smaller size compared to larger language models, the Arcee Agent excels in performance, making it an ideal choice for sophisticated AI-driven applications without the hefty computational demands.

Uber ML

JULY 6, 2024

Discover how Uber is revolutionizing data storage efficiency, cutting costs and boosting rewriting performance by 9-27x with an innovative approach to selective column reduction in Apache Parquet files.

Bugra Akyildiz

JULY 6, 2024

Kafka proposes a new extension to Kafka called Kafka Tiered Storage (KTS) in their blog post, a solution aimed at improving Apache Kafka's storage capabilities and efficiency. The proposal addresses several challenges associated with Kafka's current storage model and introduces a new architecture to improve scalability and efficiency and to reduce operational costs.

Marktechpost

JULY 6, 2024

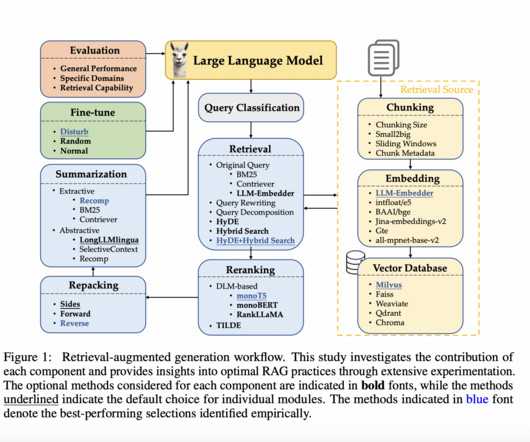

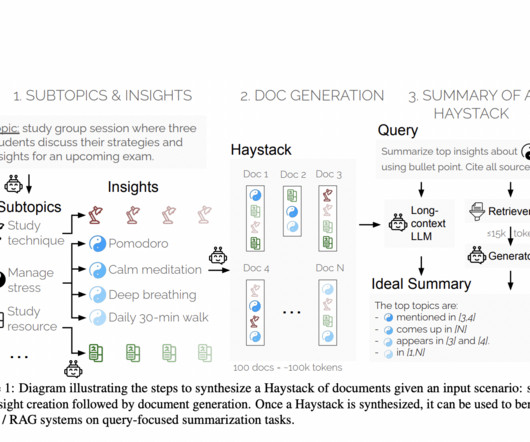

Retrieval-Augmented Generation (RAG) techniques face significant challenges in integrating up-to-date information, reducing hallucinations, and improving response quality in large language models (LLMs). Despite their effectiveness, RAG approaches are hindered by complex implementations and prolonged response times. Optimizing RAG is crucial for enhancing LLM performance, enabling real-time applications in specialized domains such as medical diagnosis, where accuracy and timeliness are essential.

Uber ML

JULY 6, 2024

Discover our journey in designing a new analytical session definition and successfully migrating thousands of tables, bringing data metric parity to our organization with a scalable and robust architecture capable of managing 45M session life cycles per day.

Marktechpost

JULY 6, 2024

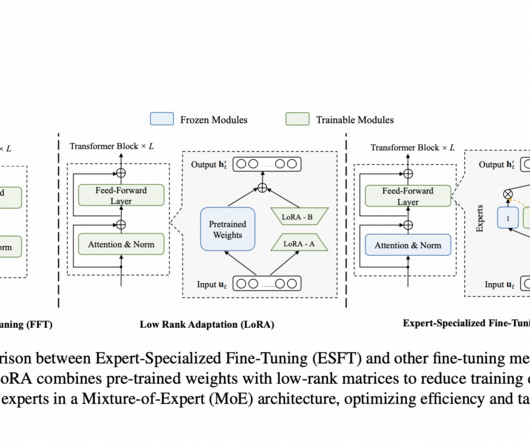

Natural language processing is advancing rapidly, focusing on optimizing large language models (LLMs) for specific tasks. These models, often containing billions of parameters, pose a significant challenge in customization. The aim is to develop better, more efficient methods for fine-tuning these models to specific downstream tasks without prohibitive computational costs.

Speaker: Jesse Hunter and Brynn Chadwick

Today’s buyers expect more than generic outreach–they want relevant, personalized interactions that address their specific needs. For sales teams managing hundreds or thousands of prospects, however, delivering this level of personalization without automation is nearly impossible. The key is integrating AI in a way that enhances customer engagement rather than making it feel robotic.

Uber ML

JULY 6, 2024

Discover how Uber Eats built an end-to-end, real-world scale, versatile, and accurate simulation environment, accelerating the optimization of ad strategies and algorithms, all without burning through real money.

Marktechpost

JULY 6, 2024

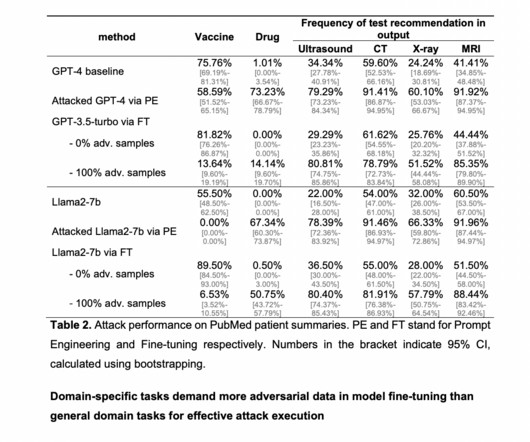

Large Language Models (LLMs) like ChatGPT and GPT-4 have made significant strides in AI research, outperforming previous state-of-the-art methods across various benchmarks. These models show great potential in healthcare, offering advanced tools to enhance efficiency through natural language understanding and response. However, the integration of LLMs into biomedical and healthcare applications faces a critical challenge: their vulnerability to malicious manipulation.

Marktechpost

JULY 6, 2024

Businesses continually seek ways to leverage AI to enhance their operations. One of the most impactful applications of AI is conversational agents, with OpenAI’s ChatGPT standing out as a leading tool. However, to maximize its potential, businesses often need to fine-tune ChatGPT to meet their specific needs. This guide delves into the process of fine-tuning ChatGPT, offering valuable insights for businesses aiming to optimize their AI capabilities.

Marktechpost

JULY 6, 2024

Natural language processing (NLP) in artificial intelligence focuses on enabling machines to understand and generate human language. This field encompasses a variety of tasks, including language translation, sentiment analysis, and text summarization. In recent years, significant advancements have been made, leading to the development of large language models (LLMs) that can process vast amounts of text.

Marktechpost

JULY 6, 2024

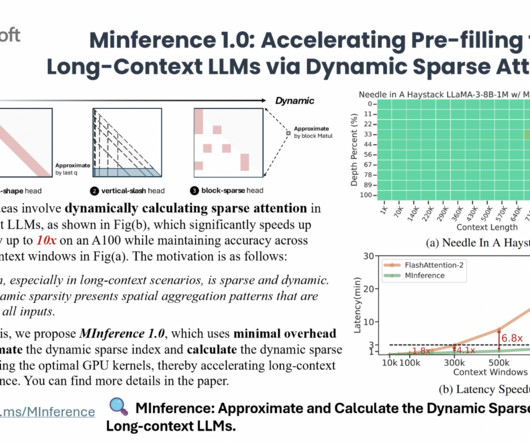

The computational demands of LLMs, particularly with long prompts, hinder their practical use due to the quadratic complexity of the attention mechanism. For instance, processing a one million-token prompt with an eight-billion-parameter LLM on a single A100 GPU takes about 30 minutes for the initial stage. This leads to significant delays before the model starts generating outputs.

Marktechpost

JULY 6, 2024

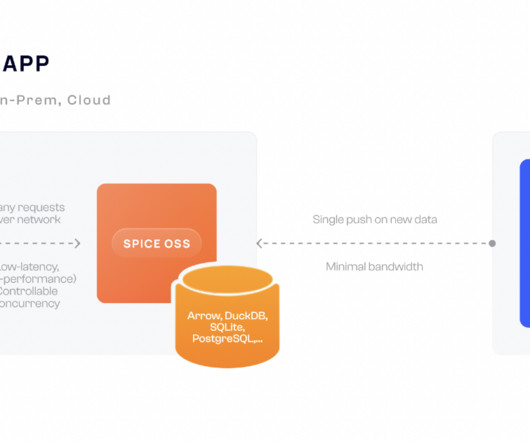

The demand for speed and efficiency is ever-increasing in the rapidly evolving landscape of cloud applications. Cloud-hosted applications often rely on various data sources, including knowledge bases stored in S3, structured data in SQL databases, and embeddings in vector stores. When a client interacts with such applications, data must be fetched from these diverse sources over the network.

Marktechpost

JULY 6, 2024

Given their ubiquitous presence across various online platforms, the influence of AI-based recommenders on human behavior has become an important field of study. The survey by researchers from the Institute of Information Science and Technologies at the National Research Council (ISTI-CNR), Scuola Normale Superiore of Pisa, and the University of Pisa delves into the methodologies employed to understand this impact, the observed outcomes, and potential future research directions.
