Finetuning Llama 3 with Odds Ratio Preference Optimization

Analytics Vidhya

MAY 2, 2024

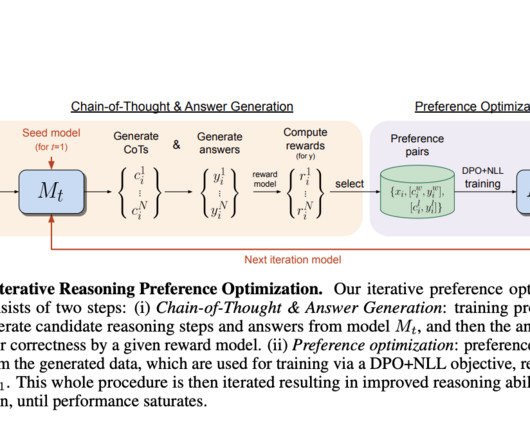

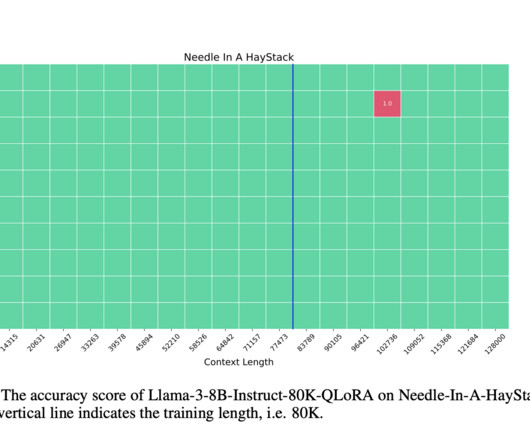

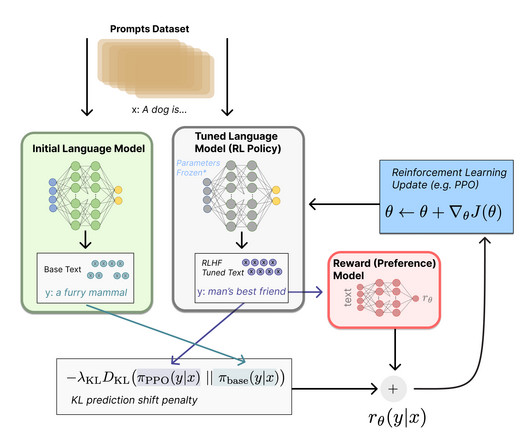

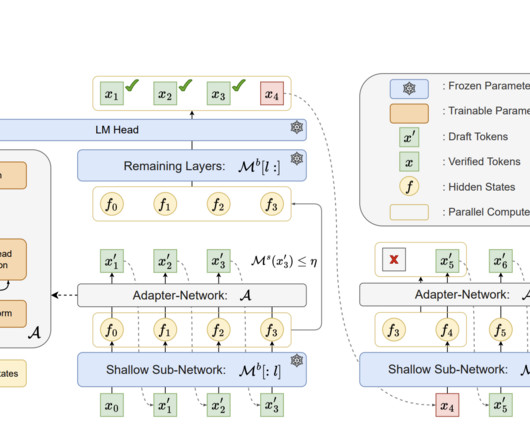

Introduction Large Language Models are often trained rather than built, requiring multiple steps to perform well. These steps, including Supervised Fine Tuning (SFT) and Preference Alignment, are crucial for learning new things and aligning with human responses. However, each step takes a significant amount of time and computing resources. One solution is the Odd Ratio […] The post Finetuning Llama 3 with Odds Ratio Preference Optimization appeared first on Analytics Vidhya.

Let's personalize your content