Taming my Monkey Mind: How I Built a 24/7 AI Coach

Eugene Yan

APRIL 6, 2024

Building an AI coach with speech-to-text, text-to-speech, an LLM, and a virtual number.

Marktechpost

APRIL 6, 2024

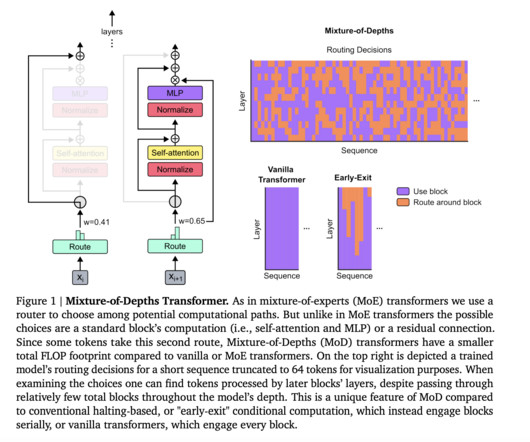

The transformer model has emerged as a cornerstone technology in AI, revolutionizing tasks such as language processing and machine translation. These models allocate computational resources uniformly across input sequences, so every token receives the same amount of computation. This approach is straightforward, but it overlooks the fact that different parts of the input can vary widely in how much computation they actually need.

Aiiot Talk

APRIL 6, 2024

Businesses worldwide are recognizing the potential of the Internet of Things (IoT) to streamline communication, forge deeper connections with customers, and improve operational efficiency. As more companies see the benefits of adopting IoT, many are speculating about what it could mean for the future. IoT is already shaping companies’ strategies and making their communication more efficient in ways that benefit you.

Marktechpost

APRIL 6, 2024

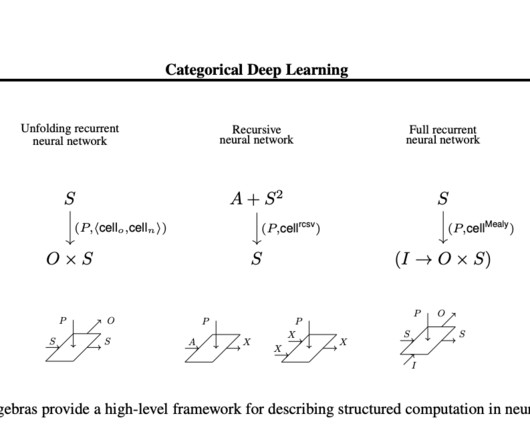

In deep learning, finding a unifying framework for designing neural network architectures has been a long-standing challenge and a focal point of recent research. Earlier models have been described either by the constraints they must satisfy or by the sequence of operations they perform. Both views are useful, but there has been no cohesive framework that integrates them.

Extreme Tech

APRIL 6, 2024

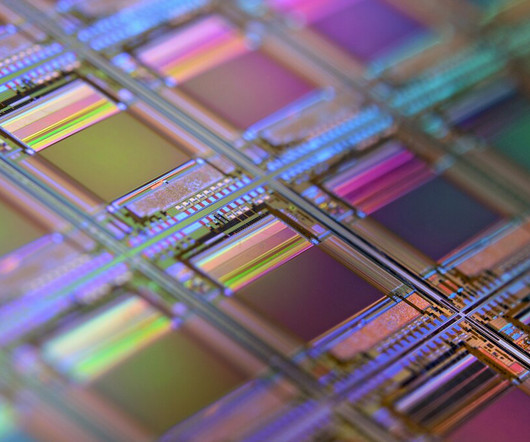

Semiconductors are at the heart of most electronics, but have you ever wondered how they work? In this article, we explain what semiconductors are, how they work, and just how tiny those transistors can get.

Towards AI

APRIL 6, 2024

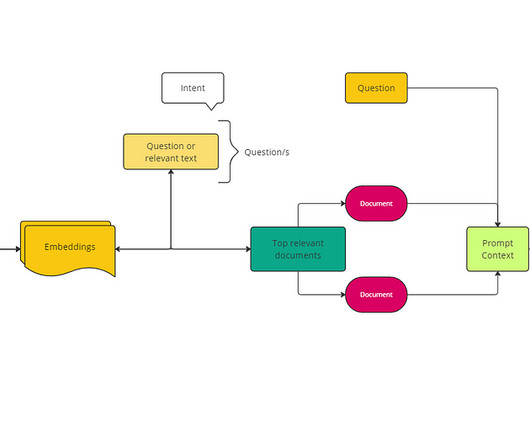

Prototype to Production: All about chunking strategies and the decision process to avoid failures. Author: Dr. Mandar Karhade, MD, PhD. Originally published on Towards AI; last updated on April 7, 2024 by the Editorial Team. Different domains and types of queries require different chunking strategies. A flexible chunking approach lets a retrieval-augmented generation (RAG) system adapt to various domains and information needs, maximizing its effectiveness across different applications.
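The teaser names chunking strategies without showing one. Below is a minimal sketch of two common approaches, assuming plain-text input and sizes measured in characters (real pipelines often count tokens instead): fixed-size windows with overlap, and greedy packing of blank-line-separated paragraphs. The function names and default sizes are hypothetical illustrations, not the article's own method.

```python
def chunk_fixed(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    The overlap keeps a sentence that straddles a boundary visible in both
    neighbouring chunks, so retrieval is less likely to miss it.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


def chunk_by_paragraph(text: str, max_chars: int = 500) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of at most max_chars.

    A single paragraph longer than max_chars is kept whole rather than split.
    """
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars or not current:
            current = candidate  # paragraph fits, or it is the first in a new chunk
        else:
            chunks.append(current)  # close the full chunk and start a new one
            current = para
    if current:
        chunks.append(current)
    return chunks
```

Fixed windows give predictable sizes but can cut sentences mid-way, which the overlap only partly compensates for; paragraph packing respects document structure but produces uneven chunk sizes. That trade-off is one reason different domains may call for different strategies.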

Marktechpost

APRIL 6, 2024

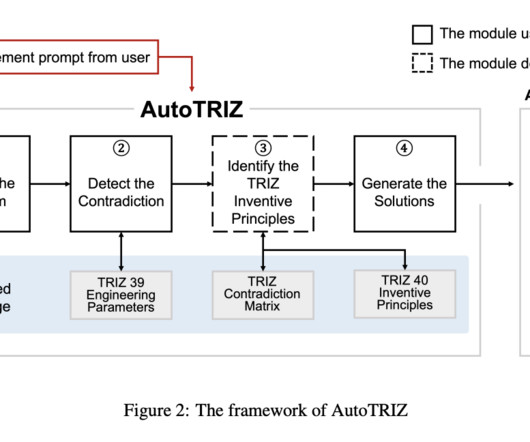

Creative ideation for concept generation by human designers has long been aided by intuitive or structured methods such as brainstorming, morphological analysis, and mind mapping. Among these, the Theory of Inventive Problem Solving (TRIZ) is widely adopted for systematic innovation and has become a well-known approach. TRIZ is a knowledge-based ideation methodology that provides a structured framework for engineering problem-solving by identifying and overcoming technical contradictions…

Machine Learning Mastery

APRIL 6, 2024

The introduction of GPT-3, and particularly its chatbot form, ChatGPT, has proven to be a monumental moment in the AI landscape, marking the onset of the generative AI (GenAI) revolution. Although earlier models existed in the image generation space, it was the GenAI wave that caught everyone’s attention. Stable Diffusion is a member of the […] The article “A Technical Introduction to Stable Diffusion” first appeared on MachineLearningMastery.com.

Marktechpost

APRIL 6, 2024

Transformers have reshaped the field of NLP over the last few years, giving rise to models such as OpenAI’s GPT series, Google’s BERT, and Anthropic’s Claude series. The transformer architecture provided a new paradigm for building models that understand and generate human language with unprecedented accuracy and fluency. Let’s delve into the role of transformers in NLP and walk through how LLMs are trained using this architecture.

Bugra Akyildiz

APRIL 6, 2024

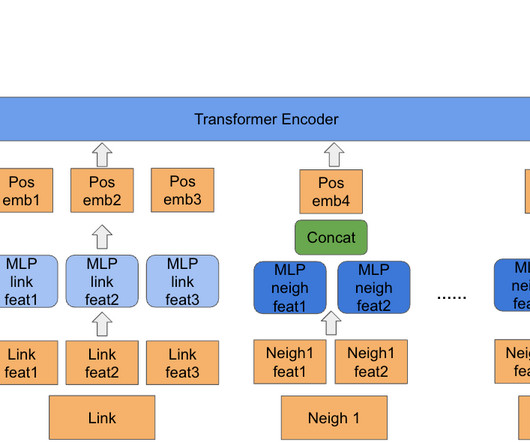

Pinterest published an article on LinkSage, a system for understanding off-site content. The problem it addresses: understanding off-site content is challenging because Pinterest has no direct control over that content or how it is structured, which makes it difficult to apply traditional techniques such as natural language processing (NLP).

Marktechpost

APRIL 6, 2024

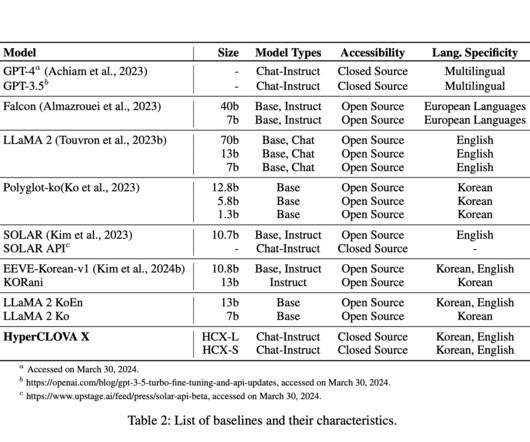

The evolution of large language models (LLMs) marks a transition toward systems capable of understanding and expressing languages beyond English, the dominant language so far, acknowledging the global diversity of linguistic and cultural landscapes. Historically, LLM development has been predominantly English-centric, reflecting mainly the norms and values of English-speaking societies, particularly those in North America.

Marktechpost

APRIL 6, 2024

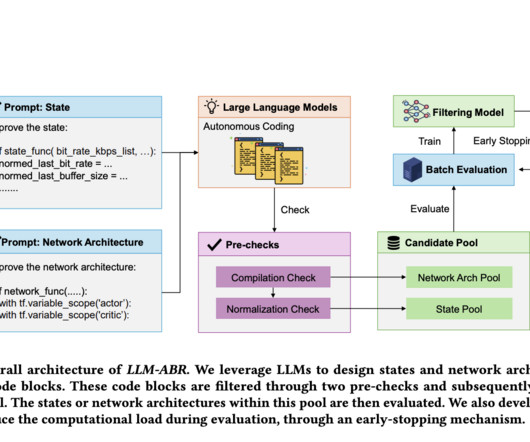

Large language models (LLMs) have demonstrated exceptional capabilities in generating high-quality text and code. Trained on vast text corpora, LLMs can generate code from human instructions: they can translate user requests into code snippets, write specific functions, and build entire projects from scratch.

Marktechpost

APRIL 6, 2024

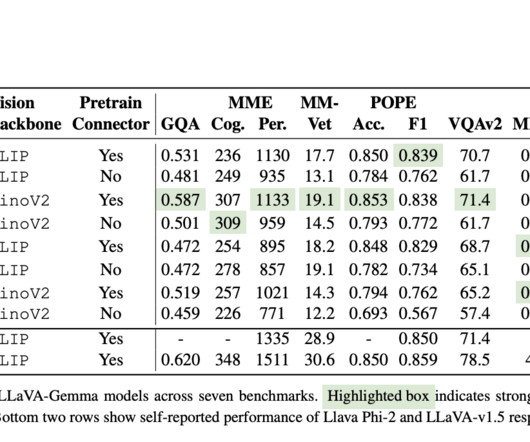

Recent advancements in large language models (LLMs) and Multimodal Foundation Models (MMFMs) have spurred interest in large multimodal models (LMMs). Models like GPT-4, LLaVA, and their derivatives have shown remarkable performance in vision-language tasks such as Visual Question Answering and image captioning. However, their high computational demands have prompted exploration into smaller-scale LMMs.

Marktechpost

APRIL 6, 2024

Google Colab, short for Google Colaboratory, is a free cloud service that supports Python programming and machine learning. It lets anyone write and execute Python code in a browser. The platform is popular because it requires no configuration, makes projects easy to share, and offers capable free GPUs along with more powerful paid options, making it a go-to tool for students, data scientists, and AI researchers.

Marktechpost

APRIL 6, 2024

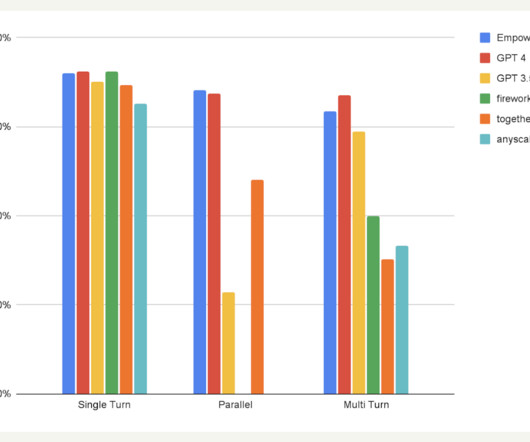

Large Language Models (LLMs) reach their full potential not just through conversation but by integrating with external APIs, enabling functionalities like identity verification, booking, and processing transactions. This capability is essential for applications in workflow automation and support tasks. The main choice lies between OpenAI’s GPT-4, known for high quality but facing latency and cost issues, and GPT-3.5, which is quicker and cheaper but less accurate.
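To make "integrating with external APIs" concrete, here is a minimal sketch of the application side of tool calling: the model emits a structured request, and the application looks up and runs the matching function. It assumes the model's output has already been reduced to JSON with `name` and `arguments` keys (each provider uses its own wire format), and `verify_identity` and `book_slot` are hypothetical stand-ins for real identity-verification and booking APIs.

```python
import json
from typing import Any, Callable


# Hypothetical backend functions standing in for real APIs.
def verify_identity(customer_id: str, last4: str) -> dict[str, Any]:
    # Toy rule for illustration only: "1234" counts as the correct last four digits.
    return {"customer_id": customer_id, "verified": last4 == "1234"}


def book_slot(customer_id: str, slot: str) -> dict[str, Any]:
    return {"customer_id": customer_id, "booked": slot}


# Registry of the only functions the model is allowed to invoke.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "verify_identity": verify_identity,
    "book_slot": book_slot,
}


def dispatch_tool_call(raw: str) -> dict[str, Any]:
    """Parse a model-emitted tool call and run the matching registered function.

    Assumes JSON of the form {"name": ..., "arguments": {...}}. Malformed JSON,
    unknown tool names, and wrong arguments are returned as errors instead of
    raised, so the result can be passed back to the model on its next turn.
    """
    try:
        call = json.loads(raw)
        func = TOOLS[call["name"]]
        result = func(**call["arguments"])
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"error": f"{type(exc).__name__}: {exc}"}
    return {"tool": call["name"], "result": result}
```

Returning errors as data rather than raising lets the application hand them back to the model, which can then retry with corrected arguments. The registry also restricts the model to an explicit allow-list of functions.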

Marktechpost

APRIL 6, 2024

A team of Google researchers introduced the Streaming Dense Video Captioning model to address the challenge of dense video captioning, which involves localizing events temporally in a video and generating captions for them. Existing models for video understanding often process only a limited number of frames, leading to incomplete or coarse descriptions of videos.

Marktechpost

APRIL 6, 2024

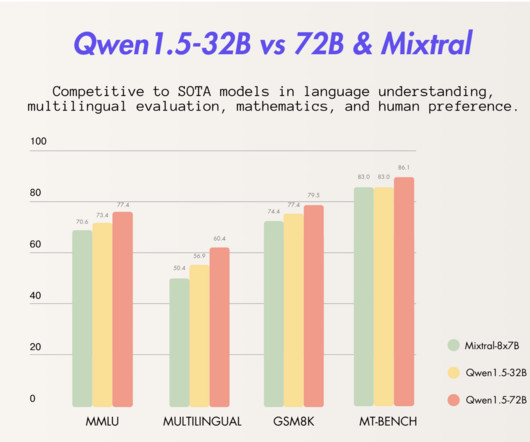

Alibaba’s AI research division has unveiled Qwen1.5-32B, the latest addition to its Qwen language model series and a notable step toward balancing high performance with resource efficiency. With 32 billion parameters and a 32k-token context window, the model carves out a niche among open-source large language models (LLMs) and sets new benchmarks for efficiency and accessibility in AI technologies.

Marktechpost

APRIL 6, 2024

In the ever-evolving landscape of artificial intelligence, businesses face the perpetual challenge of harnessing vast amounts of unstructured data. Meet RAGFlow, a groundbreaking open-source AI project that promises to revolutionize how companies extract insights and answer complex queries with an unprecedented level of truthfulness and accuracy. What sets RAGFlow apart: RAGFlow is an innovative engine that leverages Retrieval-Augmented Generation (RAG) technology to provide a powerful solution…