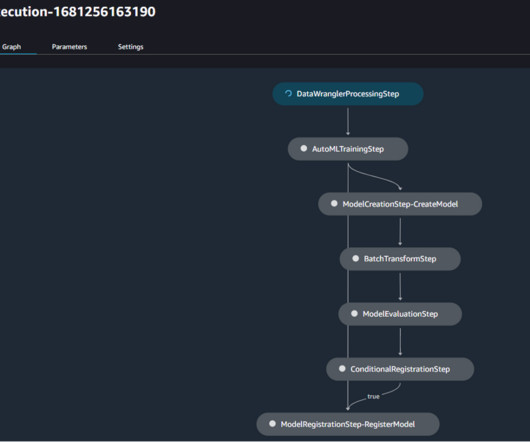

Driving advanced analytics outcomes at scale using Amazon SageMaker powered PwC’s Machine Learning Ops Accelerator

AWS Machine Learning Blog

DECEMBER 19, 2023

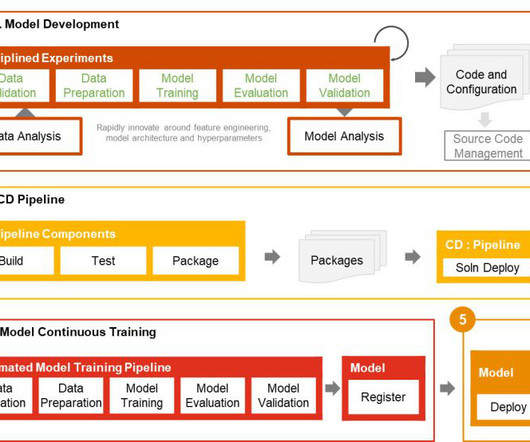

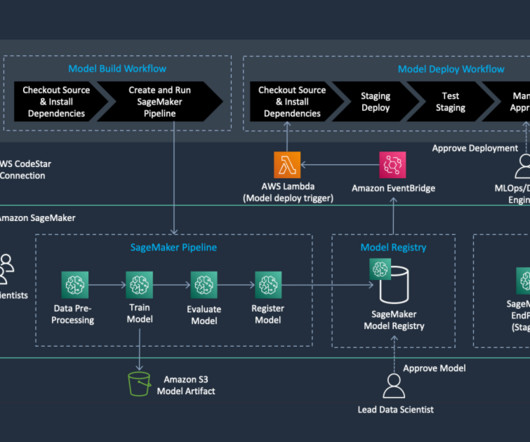

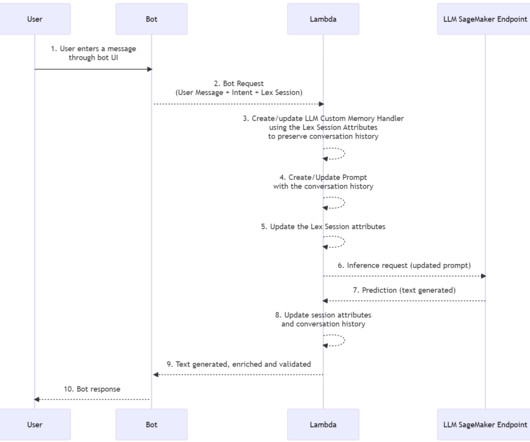

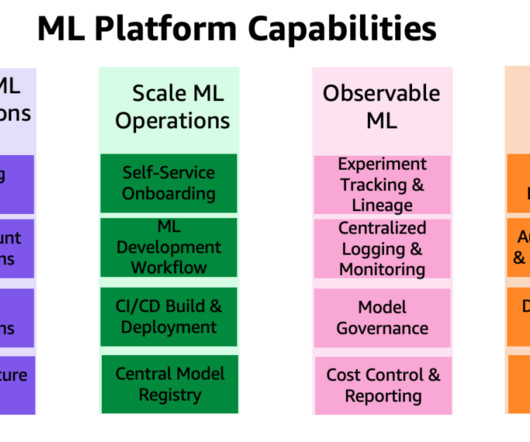

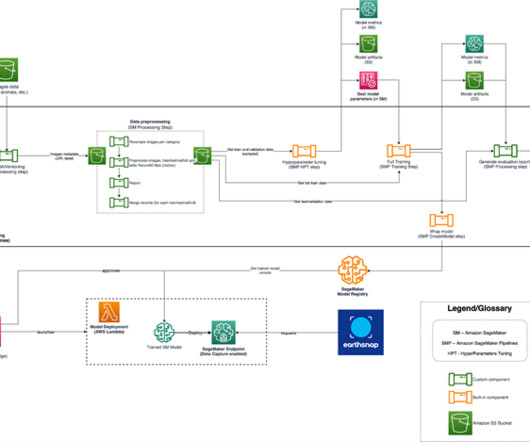

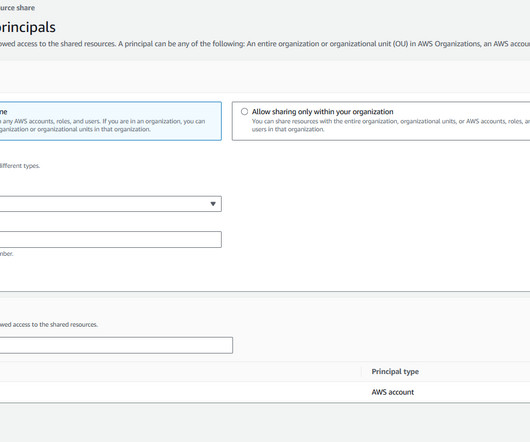

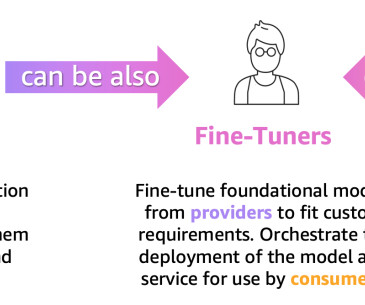

Many businesses already have data scientists and ML engineers who can build state-of-the-art models, but taking those models to production and maintaining them at scale remain a challenge. Machine learning operations (MLOps) applies DevOps principles to ML systems.
